Visual Transfer Learning for Robotic Manipulation

March 20, 2020

Posted by Yen-Chen Lin, Research Intern and Andy Zeng, Research Scientist, Robotics at Google

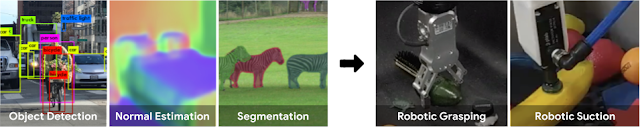

The idea that robots can learn to directly perceive the affordances of actions on objects (i.e., what the robot can or cannot do with an object) is called affordance-based manipulation. It has been explored in research on learning complex vision-based manipulation skills, including grasping, pushing, and throwing. In these systems, affordances are represented as dense pixel-wise action-value maps that estimate how good it is for the robot to execute one of several predefined motions at each location. For example, given an RGB-D image, an affordance-based grasping model might infer grasping affordances per pixel with a convolutional neural network. The grasping affordance value at each pixel represents the success rate of performing the corresponding motion primitive (e.g., a grasping action) there, and the robot then executes that primitive at the position with the highest value.

Overview of affordance-based manipulation.
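The action-selection step described above can be illustrated with a small sketch. It is not the authors' implementation: the function name `select_grasp_pixel` and the toy affordance values are hypothetical, and a plain nested list stands in for the dense map that a convolutional network would predict from an RGB-D image.

```python
from typing import List, Tuple


def select_grasp_pixel(affordances: List[List[float]]) -> Tuple[int, int, float]:
    """Return (row, col, value) of the highest-valued pixel in a dense affordance map."""
    if not affordances or not affordances[0]:
        raise ValueError("affordance map must be non-empty")
    best_row, best_col, best_value = 0, 0, float("-inf")
    for r, row in enumerate(affordances):
        for c, value in enumerate(row):
            if value > best_value:
                best_row, best_col, best_value = r, c, value
    return best_row, best_col, best_value


# Toy 3x4 map of predicted grasp success rates (hypothetical values).
affordance_map = [
    [0.10, 0.20, 0.05, 0.00],
    [0.15, 0.85, 0.40, 0.10],
    [0.05, 0.30, 0.25, 0.02],
]

row, col, value = select_grasp_pixel(affordance_map)
print(f"execute grasp primitive at pixel ({row}, {col}), predicted success {value:.2f}")
```

In a real system the map would have one value per image pixel (and possibly one map per motion primitive or gripper orientation); the selection rule itself stays a simple argmax.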

In “Learning to See before Learning to Act: Visual Pre-training for Manipulation”, a collaboration with researchers from MIT to be presented at ICRA 2020, we investigate whether existing pre-trained deep learning visual feature representations can improve the efficiency of learning robotic manipulation tasks, like grasping objects. By studying how we can intelligently transfer neural network weights between vision models and affordance-based manipulation models, we can evaluate how different visual feature representations benefit the exploration process and enable robots to quickly acquire manipulation skills using different grippers. We present practical techniques to pre-train deep learning models, which enable robots to learn to pick and grasp arbitrary objects in unstructured settings in less than 10 minutes of trial and error.

Affordance-based manipulation is essentially a way to reframe a manipulation task as a computer vision task, but rather than mapping pixels to object labels, we instead associate pixels with the values of actions. Since the structures of computer vision models and affordance models are so similar, one can leverage techniques from transfer learning in computer vision to enable affordance models to learn faster with less data. This approach re-purposes pre-trained neural network weights (i.e., feature representations) learned from large-scale vision datasets to initialize the network weights of affordance models for robotic grasping.

In computer vision, many deep model architectures are composed of two parts: a “backbone” and a “head”. The backbone consists of weights that are responsible for early-stage image processing, e.g., filtering edges, detecting corners, and distinguishing between colors, while the head consists of network weights that are used in later-stage processing, such as identifying high-level features, recognizing contextual cues, and executing spatial reasoning. The head is often much smaller than the backbone and is also more task-specific. Hence, it is common practice in transfer learning to pre-train (e.g., on ImageNet) and share backbone weights between tasks, while randomly initializing the weights of the model head for each new task.
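The backbone/head split can be made concrete with a toy sketch of weight transfer. Everything here is an assumption for illustration: weights are plain lists keyed by hypothetical names such as `backbone.conv1`, and `random_init` and `transfer` are made-up helpers rather than any framework's API. In practice the same idea is usually expressed by loading a subset of a pre-trained checkpoint into a new model.

```python
import random
from typing import Dict, List, Sequence

Weights = Dict[str, List[float]]


def random_init(sizes: Dict[str, int], seed: int = 0) -> Weights:
    """Randomly initialize every named weight vector (stand-in for a fresh model)."""
    rng = random.Random(seed)
    return {name: [rng.gauss(0.0, 0.01) for _ in range(n)] for name, n in sizes.items()}


def transfer(pretrained: Weights, target: Weights,
             parts: Sequence[str] = ("backbone",)) -> List[str]:
    """Copy weights whose name starts with one of `parts` and whose size matches.

    Returns the names that were copied; everything else in `target`
    keeps its random initialization.
    """
    copied = []
    for name, values in pretrained.items():
        in_part = any(name.startswith(part + ".") for part in parts)
        if in_part and name in target and len(target[name]) == len(values):
            target[name] = list(values)
            copied.append(name)
    return copied


# Hypothetical layer names and sizes shared by both models.
sizes = {"backbone.conv1": 4, "backbone.conv2": 4, "head.conv": 2}
pretrained = random_init(sizes, seed=1)   # stands in for a pre-trained vision model
affordance = random_init(sizes, seed=2)   # freshly initialized affordance model

copied = transfer(pretrained, affordance, parts=("backbone",))
print("copied:", copied)                  # the head stays randomly initialized
```

Calling `transfer(pretrained, affordance, parts=("backbone", "head"))` instead would also copy the head weights, provided the head shapes of the two models match.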

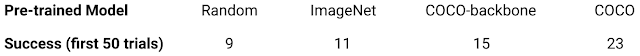

Following this recipe, we initialized our affordance-based manipulation models with backbones based on the ResNet-50 architecture and pre-trained on different vision tasks, including a classification model from ImageNet and a segmentation model from COCO. With different initializations, the robot was then tasked with learning to grasp a diverse set of objects through trial and error.

Initially, with only the backbone transferred, we did not see any significant gains in performance compared with training from scratch: grasping success rates on training objects rose to only 77% after 1,000 trial-and-error grasp attempts, outperforming training from scratch by 2%. However, upon transferring network weights from both the backbone and the head of the pre-trained COCO vision model, we saw a substantial improvement in training speed: grasping success rates reached 73% in just 500 trial-and-error grasp attempts, and jumped to 86% by 1,000 attempts. In addition, we tested our model on new objects unseen during training and found that models with the pre-trained backbone from COCO generalize better. Grasping success rates on these objects reach 83% with the pre-trained backbone alone and further improve to 90% with both the pre-trained backbone and head, outperforming the 46% reached by a model trained from scratch.

In our experiments with the grasping robot, we observed that the distribution of successful grasps versus failures in the generated datasets was far more balanced when network weights from both the backbone and head of pre-trained vision models were transferred to the affordance models, as opposed to only transferring the backbone.
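One simple way to quantify how balanced such a dataset is would be to compare the fraction of successful and failed attempts. The sketch below is only an illustration: the helper names and the two outcome logs are hypothetical and are not measurements from the experiments.

```python
from typing import Sequence


def success_fraction(outcomes: Sequence[bool]) -> float:
    """Fraction of grasp attempts that succeeded."""
    if not outcomes:
        raise ValueError("need at least one grasp attempt")
    return sum(outcomes) / len(outcomes)


def imbalance(outcomes: Sequence[bool]) -> float:
    """0.0 means equal successes and failures; 1.0 means only one class is present."""
    return abs(2.0 * success_fraction(outcomes) - 1.0)


# Hypothetical logs of early exploration (True = successful grasp).
mostly_failures = [False] * 9 + [True]       # 10% successes
more_balanced = [True, False] * 5            # 50% successes

print(f"imbalance, mostly failures: {imbalance(mostly_failures):.2f}")
print(f"imbalance, more balanced:   {imbalance(more_balanced):.2f}")
```

A dataset dominated by failures gives the affordance model few positive examples to learn from, which is one plausible reason a more balanced distribution would go along with faster learning.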

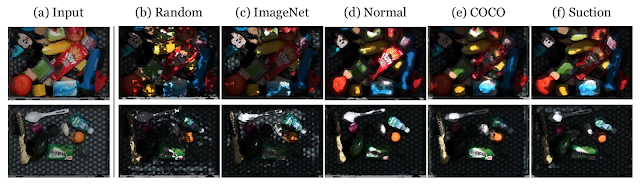

To better understand this, we visualize the neural activations that are triggered by different pre-trained models and a converged affordance model trained from scratch using a suction gripper. Interestingly, we find that the intermediate network representations learned from the head of vision models used for segmentation from the COCO dataset activate on objects in ways that are similar to the converged affordance model. This aligns with the idea that transferring as much of the vision model as possible (both backbone and head) can lead to more object-centric exploration by leveraging model weights that are better at picking up visual features and localizing objects.

Affordances predicted by different models from images of cluttered objects (a). (b) Random refers to a randomly initialized model. (c) ImageNet is a model with a backbone pre-trained on ImageNet and a randomly initialized head. (d) Normal refers to a model pre-trained to detect pixels with surface normals close to the anti-gravity axis. (e) COCO is the modified segmentation model (Mask R-CNN) trained on the COCO dataset. (f) Suction is a converged model learned from robot-environment interactions using the suction gripper.

Many of the methods that we use today for end-to-end robot learning are effectively the same as those being used for computer vision tasks. Our work here on visual pre-training illuminates this connection and demonstrates that it is possible to leverage techniques from visual pre-training to improve the learning efficiency of affordance-based manipulation applied to robotic grasping tasks. While our experiments point to a better understanding of deep learning for robots, there are still many interesting questions that have yet to be explored. For example, how do we leverage large-scale pre-training for additional modes of sensing (e.g., force-torque or tactile)? How do we extend these pre-training techniques towards more complex manipulation tasks that may not be as object-centric as grasping? These areas are promising directions for future research.

You can learn more about this work in the summary video below.

Acknowledgements

This research was done by Yen-Chen Lin (Ph.D. student at MIT), Andy Zeng, Shuran Song, Phillip Isola (faculty at MIT), and Tsung-Yi Lin, with special thanks to Johnny Lee and Ivan Krasin for valuable managerial support, Chad Richards for helpful feedback on writing, and Jonathan Thompson for fruitful technical discussions.
