Show and Tell: image captioning open sourced in TensorFlow

September 22, 2016

Posted by Chris Shallue, Software Engineer, Google Brain Team


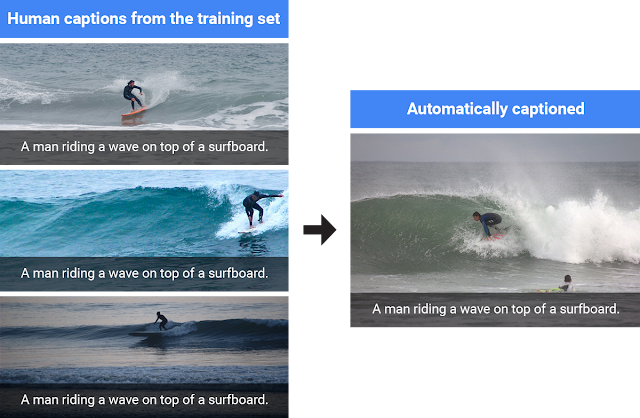

In 2014, research scientists on the Google Brain team trained a machine learning system to automatically produce captions that accurately describe images. Further development of that system led to its success in the Microsoft COCO 2015 image captioning challenge, a competition to compare the best algorithms for computing accurate image captions, where it tied for first place.

Today, we’re making the latest version of our image captioning system available as an open source model in TensorFlow. This release contains significant improvements to the computer vision component of the captioning system, is much faster to train, and produces more detailed and accurate descriptions compared to the original system. These improvements are outlined and analyzed in the paper Show and Tell: Lessons learned from the 2015 MSCOCO Image Captioning Challenge, published in IEEE Transactions on Pattern Analysis and Machine Intelligence.

Figure: Automatically captioned by our system.

Our 2014 system used the Inception V1 image classification model to initialize the image encoder, which produces the encodings that are useful for recognizing different objects in the images. This was the best image model available at the time, achieving 89.6% top-5 accuracy on the benchmark ImageNet 2012 image classification task. We replaced this in 2015 with the newer Inception V2 image classification model, which achieves 91.8% accuracy on the same task. The improved vision component gave our captioning system an accuracy boost of 2 points in the BLEU-4 metric (which is commonly used in machine translation to evaluate the quality of generated sentences) and was an important factor in its success in the captioning challenge.
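For readers unfamiliar with BLEU-4, here is a minimal sketch of the idea: it combines clipped 1-gram through 4-gram precision with a brevity penalty for captions shorter than the reference. This is an unsmoothed, sentence-level simplification written for illustration. The function names are our own, and benchmark scores are normally computed over a whole evaluation set (and quoted on a 0 to 100 scale), so this is not the evaluation code used in the challenge.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Return a Counter of all n-grams (as tuples) in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def bleu4(candidate, references):
    """Unsmoothed sentence-level BLEU-4 for one tokenized candidate caption.

    `references` is a list of tokenized human captions. Returns a value in
    [0, 1]; it is 0.0 whenever any n-gram order (1 to 4) has no match.
    """
    log_precisions = []
    for n in range(1, 5):
        cand_counts = ngrams(candidate, n)
        total = sum(cand_counts.values())
        # Clip each candidate n-gram count by its maximum count in any reference.
        max_ref = Counter()
        for ref in references:
            for gram, count in ngrams(ref, n).items():
                max_ref[gram] = max(max_ref[gram], count)
        clipped = sum(min(count, max_ref[gram]) for gram, count in cand_counts.items())
        if total == 0 or clipped == 0:
            return 0.0
        log_precisions.append(math.log(clipped / total))

    # Brevity penalty: compare against the reference length closest to the candidate.
    c = len(candidate)
    r = min((len(ref) for ref in references), key=lambda length: (abs(length - c), length))
    brevity_penalty = 1.0 if c > r else math.exp(1.0 - r / c)
    # Geometric mean of the four precisions, scaled by the brevity penalty.
    return brevity_penalty * math.exp(sum(log_precisions) / 4.0)


reference = "a dog is running on the grass".split()
candidate = "a dog is running on grass".split()
score = bleu4(candidate, [reference])
```

A caption identical to a reference scores 1.0, and a caption that shares no 4-gram with any reference scores 0.0, which is why practical implementations usually add smoothing or aggregate counts over a whole corpus.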

Today’s code release initializes the image encoder using the Inception V3 model, which achieves 93.9% accuracy on the ImageNet classification task. Initializing the image encoder with a better vision model gives the image captioning system a better ability to recognize different objects in the images, allowing it to generate more detailed and accurate descriptions. This gives an additional 2 points of improvement in the BLEU-4 metric over the system used in the captioning challenge.

Another key improvement to the vision component comes from fine-tuning the image model. This step addresses the problem that the image encoder is initialized by a model trained to classify objects in images, whereas the goal of the captioning system is to describe the objects in images using the encodings produced by the image model. For example, an image classification model will tell you that a dog, grass and a frisbee are in the image, but a natural description should also tell you the color of the grass and how the dog relates to the frisbee.

In the fine-tuning phase, the captioning system is improved by jointly training its vision and language components on human-generated captions. This allows the captioning system to transfer information from the image that is specifically useful for generating descriptive captions, but which was not necessary for classifying objects. In particular, after fine-tuning it becomes better at correctly describing the colors of objects. Importantly, the fine-tuning phase must occur after the language component has already learned to generate captions. Otherwise, the noisiness of the randomly initialized language component causes irreversible corruption to the vision component. For more details, read the full paper.
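The ordering constraint described above can be sketched as a two-phase schedule. This is a toy illustration with scalar "parameters", not the released training code: the names `trainable_components`, `train_step` and `finetune_start_step` are hypothetical, and the step counts are arbitrary since the post does not give any.

```python
def trainable_components(step, finetune_start_step):
    """Return which parts of the captioning model are updated at `step`."""
    if step < finetune_start_step:
        # Phase 1: the pretrained image encoder stays frozen while the
        # randomly initialized language model learns to generate captions.
        return ("language_model",)
    # Phase 2 (fine-tuning): vision and language components train jointly.
    return ("image_encoder", "language_model")


def train_step(params, grads, step, finetune_start_step, learning_rate=0.1):
    """Apply one gradient-descent update to the trainable components only."""
    trainable = trainable_components(step, finetune_start_step)
    return {
        name: value - learning_rate * grads[name] if name in trainable else value
        for name, value in params.items()
    }


# Toy scalars standing in for real weight tensors and gradients.
params = {"image_encoder": 1.0, "language_model": 1.0}
grads = {"image_encoder": 0.5, "language_model": 0.5}

phase1 = train_step(params, grads, step=0, finetune_start_step=10)
phase2 = train_step(params, grads, step=10, finetune_start_step=10)
```

In phase 1 the noisy gradients coming from the untrained language model never reach the image encoder, which is the point of delaying fine-tuning.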

The original captioning system was implemented in the DistBelief software framework. The TensorFlow implementation released today achieves the same level of accuracy with significantly faster performance: time per training step is just 0.7 seconds in TensorFlow compared to 3 seconds in DistBelief on an Nvidia K20 GPU, meaning that total training time is roughly 25% of the time previously required.
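As a quick check of the arithmetic, the per-step timings quoted above imply the following ratio. This assumes both implementations need the same number of training steps, which the post does not state explicitly.

```python
tensorflow_seconds_per_step = 0.7
distbelief_seconds_per_step = 3.0

# Fraction of the old training time, assuming an equal number of steps.
time_ratio = tensorflow_seconds_per_step / distbelief_seconds_per_step
speedup = distbelief_seconds_per_step / tensorflow_seconds_per_step

print(f"{time_ratio:.1%} of the previous time, about {speedup:.1f}x faster")
# prints "23.3% of the previous time, about 4.3x faster"
```

So the 25% figure is a rounded value; the quoted step times work out to a little under a quarter of the previous training time.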

A natural question is whether our captioning system can generate novel descriptions of previously unseen contexts and interactions. The system is trained by showing it hundreds of thousands of images that were captioned manually by humans, and it often re-uses human captions when presented with scenes similar to what it’s seen before.

Figure: When the model is presented with scenes similar to what it’s seen before, it will often re-use human-generated captions.

Figure: Our model generates a completely new caption using concepts learned from similar scenes in the training set.
