Project Ihmehimmeli: Temporal Coding in Spiking Neural Networks

September 18, 2019

Posted by Iulia-Maria Comșa and Krzysztof Potempa, Research Engineers, Google Research, Zürich

Discoveries in neuroscience are an ongoing source of inspiration for creating more efficient artificial neural networks that process information in ways similar to biological organisms. These networks have recently achieved resounding success in domains ranging from playing board and video games to fine-grained understanding of video. However, there is one fundamental aspect of biological brains that artificial neural networks do not yet fully leverage: temporal encoding of information. Preserving temporal information allows a better representation of dynamic features, such as sounds, and enables fast responses to events that may occur at any moment. Furthermore, although biological systems can consist of billions of neurons, information can be carried by a single signal (‘spike’) fired by an individual neuron, with information encoded in the timing of the signal itself.

Based on this biological insight, project Ihmehimmeli explores how artificial spiking neural networks can exploit temporal dynamics using various architectures and learning settings. “Ihmehimmeli” is a tongue-in-cheek Finnish word for a complex tool or machine element whose purpose is not immediately obvious. The word captures our aim to build complex recurrent neural network architectures with temporal encoding of information. We use artificial spiking networks with a temporal coding scheme in which more interesting or surprising information, such as louder sounds or brighter colours, causes earlier neuronal spikes. Along the information processing hierarchy, the winning neurons are those that spike first. Such an encoding naturally implements a classification scheme in which input features are encoded in the spike times of their corresponding input neurons, while the output class is encoded by the output neuron that spikes earliest.
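To make the coding scheme concrete, here is a minimal sketch in Python with NumPy. It is an illustration only, not the project's implementation: the linear intensity-to-time mapping, the `t_max` parameter, the function names and the example output spike times are all assumptions chosen for clarity.

```python
import numpy as np

def encode_to_spike_times(intensities, t_max=1.0):
    """Map intensities in [0, 1] to spike times in [0, t_max].

    Assumed linear mapping: stronger inputs spike earlier.
    """
    x = np.clip(np.asarray(intensities, dtype=float), 0.0, 1.0)
    return t_max * (1.0 - x)

def decode_class(output_spike_times):
    """Return the index of the output neuron that spikes first.

    A neuron that never spikes can be represented with np.inf.
    """
    return int(np.argmin(output_spike_times))

# Three input pixels: dark, dim and bright.
pixels = np.array([0.0, 0.25, 1.0])
spike_times = encode_to_spike_times(pixels)  # the brightest pixel spikes first

# Hypothetical spike times of three output neurons (made-up values).
outputs = np.array([2.3, 1.7, 3.1])
predicted = decode_class(outputs)  # the earliest-spiking neuron gives the class
```

In this sketch the brightest pixel spikes at time 0 and the darkest at `t_max`, and the predicted class is simply the position of the earliest output spike.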

The Ihmehimmeli project team holding a himmeli, a symbol for the aim to build recurrent neural network architectures with temporal encoding of information.

We trained the network on classic machine learning benchmarks, with features encoded in time. The spiking network successfully learned to solve noisy Boolean logic problems and achieved a test accuracy of 97.96% on MNIST, a result comparable to conventional fully connected networks with the same architecture. However, unlike conventional networks, our spiking network uses an encoding that is generally more biologically plausible and, for a small trade-off in accuracy, can compute the result in a highly energy-efficient manner, as detailed below.

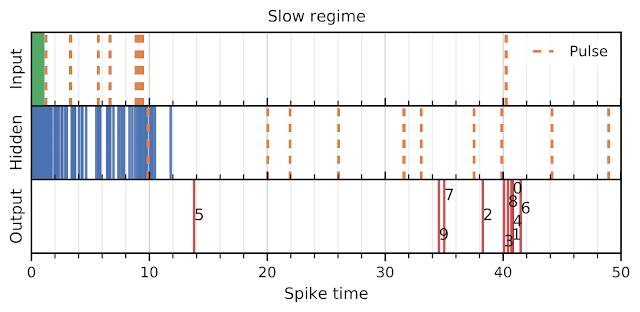

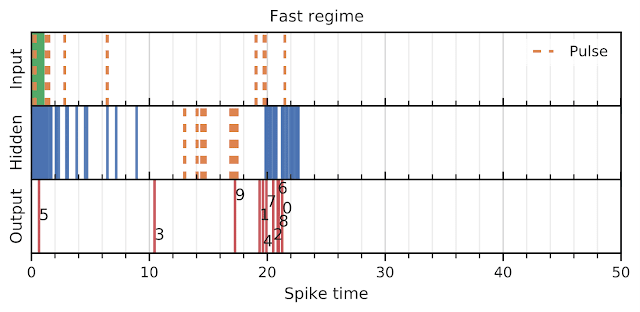

While training the spiking network on MNIST, we observed the neural network spontaneously shift between two operating regimes. Early during training, the network exhibited a slow and highly accurate regime, where almost all neurons fired before the network made a decision. Later in training, the network spontaneously shifted into a fast but slightly less accurate regime. This behaviour was intriguing, as we did not optimize for it explicitly. Thus spiking networks can, in a sense, be “deliberative”, or make a snap decision on the spot. This is reminiscent of the trade-off between speed and accuracy in human decision-making.

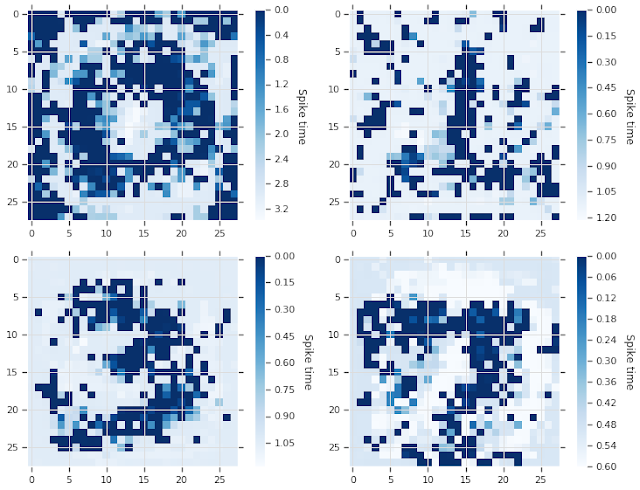

We were able to recover representations of the digits learned by the spiking network by gradually adjusting a blank input image to maximize the response of a target output neuron. This indicates that the network learns human-like representations of the digits, as opposed to other possible combinations of pixels that might look “alien” to people. Having interpretable representations is important in order to understand what the network is truly learning and to prevent a small change in input from causing a large change in the result.

How the network “imagines” the digits 0, 1, 3 and 7.
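The idea of adjusting a blank input to maximize the response of one output neuron can be sketched with gradient ascent. The example below is a rough illustration under strong assumptions: it uses a random linear-softmax model as a stand-in rather than a spiking network, treats the log-probability of the target class as the "response", and all names and hyperparameters (`maximize_response`, `toy_weights`, `steps`, `lr`) are hypothetical.

```python
import numpy as np

def softmax(z):
    """Numerically stable softmax."""
    z = z - np.max(z)
    e = np.exp(z)
    return e / e.sum()

def maximize_response(weights, target, steps=200, lr=0.05):
    """Gradient ascent on the input of a linear-softmax stand-in model.

    Starts from a blank (all-zero) image and repeatedly nudges the
    pixels to raise the log-probability of the target output, keeping
    pixel values in [0, 1].
    """
    image = np.zeros(weights.shape[1])  # blank input
    for _ in range(steps):
        probs = softmax(weights @ image)
        # Gradient of log p[target] with respect to the input pixels.
        grad = weights[target] - probs @ weights
        image = np.clip(image + lr * grad, 0.0, 1.0)
    return image

rng = np.random.default_rng(0)
# Random stand-in weights (10 outputs, 64 pixels); not a trained network.
toy_weights = rng.normal(size=(10, 64))
blank = np.zeros(64)
imagined = maximize_response(toy_weights, target=3)

before = softmax(toy_weights @ blank)[3]
after = softmax(toy_weights @ imagined)[3]
```

With random weights the resulting image is not meaningful to look at; the sketch only shows the optimization loop. For a trained network, the same loop would be run against that network's own output response instead.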

Acknowledgements

The work described here was authored by Iulia Comșa, Krzysztof Potempa, Luca Versari, Thomas Fischbacher, Andrea Gesmundo and Jyrki Alakuijala. We are grateful for all discussions and feedback on this work that we received from our colleagues at Google.
