In 2016, we welcomed the inaugural class of the Google Brain Residency, a select group of 27 individuals participating in a 12-month program focused on jump-starting a career in machine learning and deep learning research. Since then, the program has grown rapidly and evolved into the Google AI Residency, which gives residents the opportunity to embed themselves within the broader group of Google teams working on machine learning research and its applications.

The second class of residents has matched the first in its accomplishments, publishing multiple works in top-tier machine learning, robotics and healthcare conferences and journals. Publication topics include:

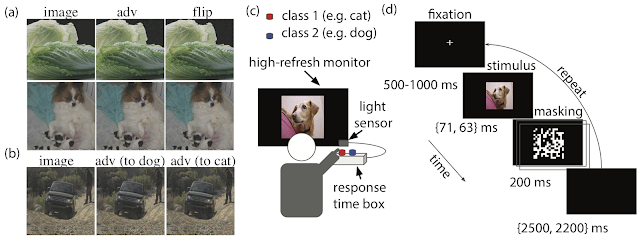

- A study on the effect of adversarial examples on human visual perception.

- An algorithm that enables robots to learn more safely by avoiding states from which they cannot reset.

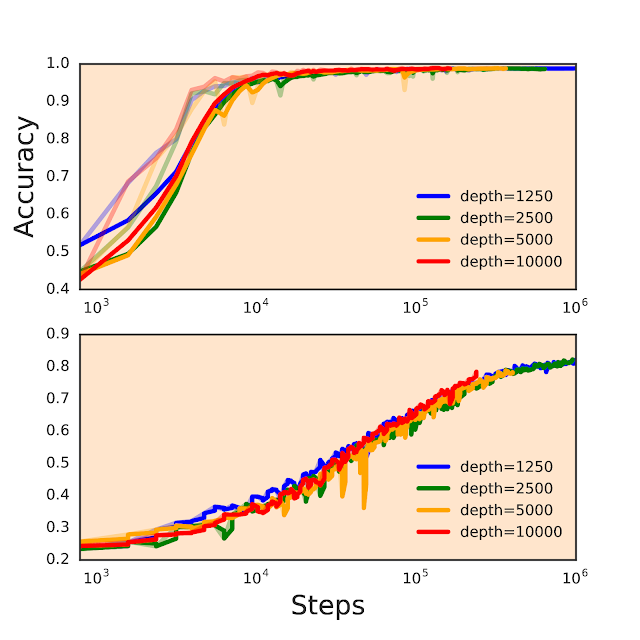

- Initialization methods that enable training of neural networks with unprecedented depths of 10K+ layers.

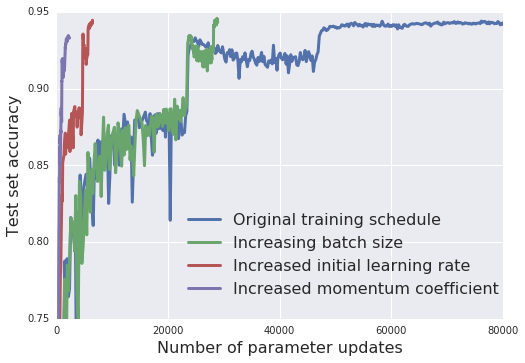

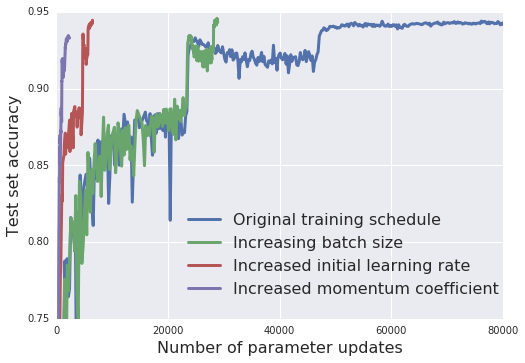

- A method to make training more scalable by using larger mini-batches, which, when applied to ResNet-50 on ImageNet, reduced training time without compromising test accuracy.

- And many more...

Applying a sequence of simple scaling rules, we increase the SGD batch size and reduce the number of parameter updates required to train our model by an order of magnitude, without sacrificing test set accuracy. This enables us to dramatically reduce model training time. For more details, see “Don’t Decay the Learning Rate, Increase the Batch Size”, accepted at ICLR 2018.
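The scaling idea in the caption above can be illustrated with a toy calculation. This is a minimal sketch, not the paper's code: the function names, dataset size, epoch counts and hyperparameters are all hypothetical, and it assumes the SGD noise scale is roughly proportional to the learning rate divided by the batch size. Under that assumption, growing the batch by some factor plays the same role as shrinking the learning rate by that factor, while needing fewer parameter updates per epoch.

```python
import math


def decay_lr_schedule(base_lr, base_batch, factor, num_phases):
    """Conventional schedule: learning rate shrinks each phase, batch size is fixed."""
    return [(base_lr / factor**i, base_batch) for i in range(num_phases)]


def grow_batch_schedule(base_lr, base_batch, factor, num_phases):
    """Alternative schedule: learning rate is fixed, batch size grows each phase."""
    return [(base_lr, base_batch * factor**i) for i in range(num_phases)]


def count_updates(num_examples, epochs_per_phase, batch_sizes):
    """Total parameter updates when each phase trains with its own batch size."""
    return sum(
        epochs * math.ceil(num_examples / batch)
        for epochs, batch in zip(epochs_per_phase, batch_sizes)
    )


if __name__ == "__main__":
    # Illustrative numbers only; none of these come from the paper.
    num_examples = 50_000
    epochs_per_phase = [30, 30, 30]
    decay = decay_lr_schedule(base_lr=0.1, base_batch=128, factor=5, num_phases=3)
    grow = grow_batch_schedule(base_lr=0.1, base_batch=128, factor=5, num_phases=3)

    for (lr_a, b_a), (lr_b, b_b) in zip(decay, grow):
        # The lr / batch ratio (assumed noise-scale proxy) matches in every phase.
        print(f"decay: lr/B = {lr_a / b_a:.2e}   grow: lr/B = {lr_b / b_b:.2e}")

    print("updates, decaying lr:  ",
          count_updates(num_examples, epochs_per_phase, [b for _, b in decay]))
    print("updates, growing batch:",
          count_updates(num_examples, epochs_per_phase, [b for _, b in grow]))
```

In this toy setup the two schedules keep the same learning-rate-to-batch-size ratio in every phase, but the growing-batch schedule performs fewer updates overall. The size of the saving depends entirely on the chosen factor and phase lengths, so the numbers here should not be read as a reproduction of the reported results.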

With the 2017 class of Google AI residents graduated and off to pursue the next exciting phase in their careers, their desks were quickly filled in June by the 2018 class. Furthermore, this new class is the first to be embedded in various teams across Google’s global offices, pursuing research in areas such as perception, algorithms and optimization, language, healthcare and much more. We look forward to seeing what they can accomplish and contribute to the broader research community!