Highlights from the 3rd Cohort of the Google AI Residency Program

November 7, 2019

Posted by Katie Meckley, Program Manager, Google AI Residency


This fall marks the successful conclusion of the third cohort of the Google AI Residency Program. Started in 2016 with 27 individuals in Mountain View, CA, the 12-month program has grown to nearly 100 residents from nine locations across the globe. Program participants have gone on to great success in PhD programs, academia, non-profits, and industry. Many have also become full-time Google researchers.

The program’s latest installment was our most successful yet, as residents advanced research across a broad range of fields, such as machine perception, algorithms and optimization, language understanding, healthcare, and many more. Below are a handful of innovative projects from some of this year’s alumni.

- A large-scale study on cross-lingual transfer in massive multilingual neural machine translation models (recently highlighted as part of this post), trained on billions of sentence pairs from more than 100 languages in order to significantly improve translation for both low- and high-resource languages.

- A generative model for Scalable Vector Graphics (SVGs), which can be used to aid designers in generating fonts.

- A method to learn GANs using discrepancy divergence, a measure that accounts for both the loss function and hypothesis set to provide theoretical learning guarantees.

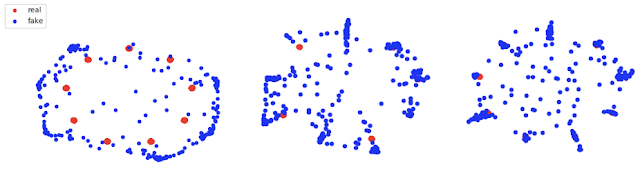

As more generators are added to the DGAN ensemble more modes in the real distribution are covered. From left to right: 1 generator, 5 generators, and 10 generators.

- A likelihood ratio method for deep generative models that effectively corrects for confounding background statistics to improve out-of-distribution (OOD) detection, and a new benchmark dataset for OOD detection in genomics.

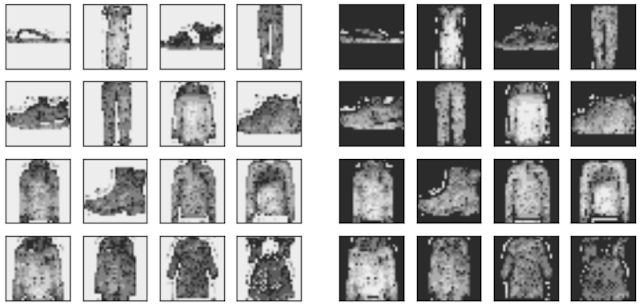

Log-likelihood (left) and log likelihood-ratio (right) of each pixel for Fashion-MNIST. The likelihood is dominated by the “background” pixels, whereas the likelihood ratio focuses on the “semantic” pixels and is thus better for OOD detection.

- A study showing when label smoothing helps, focusing on its impact on the calibration of predictions, the representations learned by the penultimate layer, and the effectiveness of knowledge distillation.

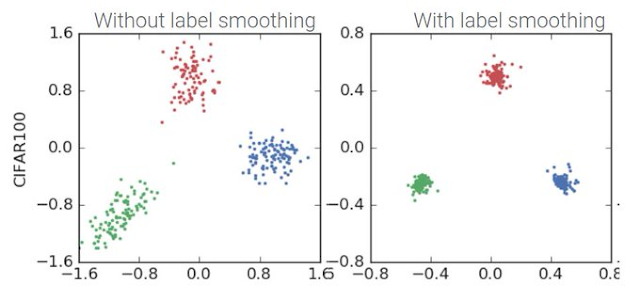

2D-projection of representations of three CIFAR100 classes. Without label smoothing, examples are spread, but with label smoothing each example is encouraged to be equally distant to the clusters of the other classes, attenuating intra-class variation and inter-class similarity structure.
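For readers unfamiliar with label smoothing, here is a minimal sketch of the standard formulation, in which a one-hot target is mixed with a uniform distribution over the classes. It is illustrative only and is not the residents' code: the function names `smooth_labels` and `cross_entropy`, and the smoothing weight `alpha=0.1`, are assumptions chosen for the example.

```python
import math


def smooth_labels(label, num_classes, alpha=0.1):
    """Mix a one-hot target with a uniform distribution.

    Each class k gets (1 - alpha) * one_hot[k] + alpha / num_classes.
    `alpha` is a hypothetical smoothing weight chosen for illustration.
    """
    if not 0 <= label < num_classes:
        raise ValueError("label must be in [0, num_classes)")
    uniform = alpha / num_classes
    return [
        (1.0 - alpha) * (1.0 if k == label else 0.0) + uniform
        for k in range(num_classes)
    ]


def cross_entropy(target, probs):
    """Cross-entropy between a target distribution and predicted probabilities."""
    return -sum(t * math.log(p) for t, p in zip(target, probs) if t > 0.0)


# Smoothed target for class 2 out of 4 classes.
targets = smooth_labels(label=2, num_classes=4, alpha=0.1)

# A very confident prediction is penalized on the non-target classes,
# which discourages the model from becoming over-confident.
confident = [0.001, 0.001, 0.997, 0.001]
moderate = [0.025, 0.025, 0.925, 0.025]
loss_confident = cross_entropy(targets, confident)
loss_moderate = cross_entropy(targets, moderate)
```

With smoothed targets, the loss is lowest when the predicted probabilities match the smoothed distribution rather than a one-hot vector, so `loss_moderate` comes out smaller than `loss_confident` here.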

The successes of our AI residents go beyond academic publishing. Their achievements include:

- Organizing a workshop, bringing together experts in theoretical physics and deep learning, to explore how tools from physics can shed light on the theory of deep learning.

- Founding Queer in AI, an organization for fostering a community of queer researchers and raising awareness of queer issues in AI/ML.

- Organizing a hands-on TensorFlow tutorial on using Deep Learning for Natural Language Processing.

- Automatically learning neural net architectures with AdaNet, an open-source, TensorFlow-based framework.

- Developing Coconet, the model behind the first AI-powered Doodle (created to celebrate renowned German composer and musician Johann Sebastian Bach).

Also, beginning with the next program cycle, residents will be hosted for a duration of 12 months, with the option of extending up to 18 months! This exciting shift comes as part of our effort to improve the overall program experience and outcomes for residents as the program continues to grow and scale.

