Using Deep Learning to Create Professional-Level Photographs

July 13, 2017

Posted by Hui Fang, Software Engineer, Machine Perception


Machine learning (ML) excels in many areas with well-defined goals. Tasks with a clear right or wrong answer make training easier and let the algorithm reach its goal, whether that is correctly identifying objects in images or providing a suitable translation from one language to another. However, there are areas where objective evaluations are not available. For example, whether a photograph is beautiful is measured by its aesthetic value, which is a highly subjective concept.

Figure: A professional(?) photograph of Jasper National Park, Canada.

Training the Model

While aesthetics can be modelled using datasets like AVA, naively using such a model to enhance photos may miss some aspects of aesthetics, for example by making a photo over-saturated. Using supervised learning to learn multiple aspects of aesthetics properly, however, may require a labelled dataset that is intractable to collect.

Our approach relies only on a collection of professional-quality photos, without before/after image pairs or any additional labels. It automatically breaks aesthetics down into multiple aspects, each of which is learned individually with negative examples generated by a coupled image operation. By keeping these image operations semi-“orthogonal”, we can enhance a photo's composition, saturation/HDR level and dramatic lighting with fast and separable optimizations:

Figure: A panorama (a) is cropped into (b), with saturation and HDR strength enhanced in (c), and with a dramatic mask applied in (d). Each step is guided by one learned aspect of aesthetics.
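To make the idea of coupled image operations and separable optimizations more concrete, here is a minimal sketch in Python. It is not the system described in this post: the function names, the parameter ranges, and especially `toy_score` (a hand-written stand-in for a learned aesthetic model) are all assumptions made for illustration. An image is simply a list of `(r, g, b)` tuples with values in [0, 1]. The sketch shows two things: how a degraded "negative" could be produced from a good photo by pushing one operation away from the identity, and how each operation's parameter can be chosen by its own one-dimensional search when the operations barely interfere with each other.

```python
import colorsys
import random


def adjust_hsv(image, sat=1.0, val=1.0):
    """Scale HSV saturation and value of every (r, g, b) pixel, clamped to [0, 1]."""
    out = []
    for r, g, b in image:
        h, s, v = colorsys.rgb_to_hsv(r, g, b)
        out.append(colorsys.hsv_to_rgb(h, min(1.0, s * sat), min(1.0, v * val)))
    return out


# Two illustrative operations. Scaling saturation leaves value untouched and
# vice versa (unless clamping kicks in), so they are roughly "orthogonal".
def set_saturation(image, factor):
    return adjust_hsv(image, sat=factor)


def set_brightness(image, factor):
    return adjust_hsv(image, val=factor)


def make_negative(image, operation, rng):
    """Degrade a good photo by applying `operation` with a clearly non-identity factor."""
    # Assumed ranges: either clearly too weak or clearly too strong.
    factor = rng.uniform(0.2, 0.7) if rng.random() < 0.5 else rng.uniform(1.5, 3.0)
    return operation(image, factor), factor


def mean_hsv(image):
    """Return (mean saturation, mean value) over all pixels."""
    hsv = [colorsys.rgb_to_hsv(*px) for px in image]
    n = len(hsv)
    return sum(p[1] for p in hsv) / n, sum(p[2] for p in hsv) / n


def toy_score(image):
    """Hypothetical stand-in for learned aesthetic models (NOT a trained model).

    It simply prefers a mean saturation near 0.6 and a mean value near 0.7.
    """
    s, v = mean_hsv(image)
    return -(abs(s - 0.6) + abs(v - 0.7))


def enhance(image, steps, score):
    """Run one 1-D search per operation, in order, keeping the best candidate each time."""
    chosen = []
    for operation, candidates in steps:
        best = max(candidates, key=lambda p: score(operation(image, p)))
        image = operation(image, best)
        chosen.append(best)
    return image, chosen


if __name__ == "__main__":
    photo = [(0.5, 0.4, 0.3), (0.2, 0.3, 0.4)]  # a two-pixel toy "photo"
    negative, factor = make_negative(photo, set_saturation, random.Random(0))
    steps = [
        (set_saturation, [0.5, 0.75, 1.0, 1.25, 1.5, 2.0]),
        (set_brightness, [0.75, 1.0, 1.25, 1.5, 2.0]),
    ]
    enhanced, chosen = enhance(photo, steps, toy_score)
    print("degradation factor:", factor)
    print("chosen parameters:", chosen)
```

Because each search only varies one parameter, the cost grows with the sum of the candidate counts rather than their product. That benefit depends on the operations staying close to independent; if one operation strongly changed what another one should do, a joint search would be needed instead.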

Results

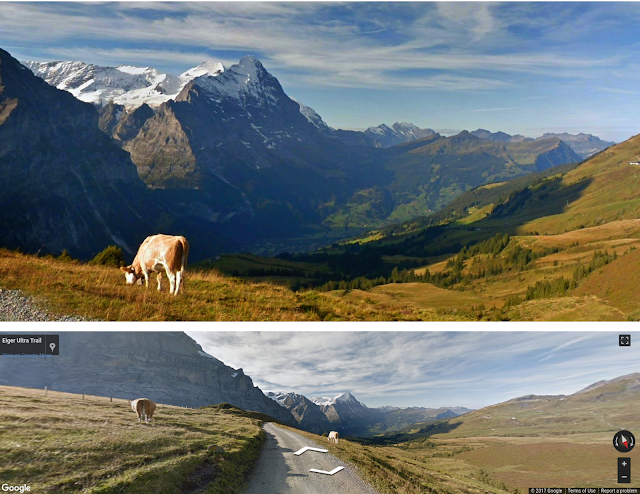

Some creations of our system from Google Street View are shown below. As you can see, the application of the trained aesthetic filters creates some dramatic results (including the image we started this post with!):

Figure: Jasper National Park, Canada.

Figure: Interlaken, Switzerland.

Figure: Park Parco delle Orobie Bergamasche, Italy.

Figure: Jasper National Park, Canada.

To judge how successful our algorithm was, we designed a “Turing-test”-like experiment: we mixed our creations with other photos of varying quality and showed them to several professional photographers. They were instructed to assign a quality score to each photo, with the scores defined as follows:

- 1: Point-and-shoot without consideration for composition, lighting, etc.

- 2: Good photos from general population without a background in photography. Nothing artistic stands out.

- 3: Semi-pro. Great photos showing clear artistic aspects. The photographer is on the right track to becoming a professional.

- 4: Pro.

Figure: Scores received from professional photographers for photos with different predicted scores.
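For readers who want to run a similar evaluation, the following is a minimal sketch of how such ratings could be tabulated: each photo has a predicted score from the model and a 1 to 4 rating from a photographer, and ratings are grouped by predicted-score range. The function names, the bucket width, and the `(predicted_score, rating)` record layout are assumptions for illustration, and the sample records in the usage section are made-up placeholders, not data from our experiment.

```python
import math
from collections import defaultdict

# The four-point scale given to the photographers.
RATING_MEANING = {1: "point-and-shoot", 2: "good", 3: "semi-pro", 4: "pro"}


def ratings_by_predicted_score(records, bucket_width=0.5):
    """Group photographer ratings by the predicted-score bucket of each photo.

    `records` is an iterable of (predicted_score, rating) pairs. Each bucket is
    keyed by its lower bound, e.g. 2.5 for predicted scores in [2.5, 3.0).
    """
    buckets = defaultdict(list)
    for predicted, rating in records:
        if rating not in RATING_MEANING:
            raise ValueError(f"rating must be one of {sorted(RATING_MEANING)}, got {rating!r}")
        lower_bound = math.floor(predicted / bucket_width) * bucket_width
        buckets[lower_bound].append(rating)
    return dict(buckets)


def share_at_or_above(ratings, threshold=3):
    """Fraction of ratings at or above `threshold` (3 means semi-pro or better)."""
    return sum(r >= threshold for r in ratings) / len(ratings)


if __name__ == "__main__":
    # Placeholder records for illustration only; NOT results from the experiment.
    sample = [(2.1, 1), (2.3, 2), (2.6, 3), (2.9, 3), (2.7, 4), (2.8, 2)]
    for lower_bound, ratings in sorted(ratings_by_predicted_score(sample).items()):
        print(lower_bound, ratings, share_at_or_above(ratings))
```

Plotting the per-bucket rating distribution as one curve per predicted-score range would give a chart of the kind captioned above.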

The Street View panoramas served as a test bed for our project. Someday this technique might even help you take better photos in the real world. We compiled a showcase of the photos we were satisfied with. If you see a photo you like, you can click on it to bring up a nearby Street View panorama. Would you make the same decision if you were there holding the camera at that moment?

Acknowledgements

This work was done by Hui Fang and Meng Zhang from Machine Perception at Google Research. We would like to thank Vahid Kazemi for his earlier work on predicting AVA scores using an Inception network, and Sagarika Chalasani, Nick Beato, Bryan Klingner and Rupert Breheny for their help in processing Google Street View panoramas. We would like to thank Peyman Milanfar, Tomas Izo, Christian Szegedy, Jon Barron and Sergey Ioffe for their helpful reviews and comments. Huge thanks to our anonymous professional photographers!
