Teaching machines to read between the lines (and a new corpus with entity salience annotations)

August 25, 2014

Posted by Dan Gillick, Research Scientist, and Dave Orr, Product Manager


Language understanding systems are largely trained on freely available data, such as the Penn Treebank, perhaps the most widely used linguistic resource ever created. We have previously released a good deal of linguistic data ourselves, both to contribute to the language understanding community and to encourage further research in these areas.

Now, we’re releasing a new dataset, based on another great resource: the New York Times Annotated Corpus, a set of 1.8 million articles spanning 20 years. Of these, 600,000 have hand-written summaries, and more than 1.5 million are tagged with the people, places, and organizations mentioned in the article. The Times encourages use of the metadata for all kinds of things, and has set up a forum to discuss related research.

We recently used this corpus to study a topic called “entity salience”. To understand salience, consider: how do you know what a news article or a web page is about? Reading comes pretty easily to people -- we can quickly identify the places or things or people most central to a piece of text. But how might we teach a machine to perform this same task? Solving this problem is a key step towards machines that can read and understand an article.

One way to approach the problem is to look for words that appear more often than their ordinary rates would predict. For example, if you see the word “coach” 5 times in a 581-word article, and compare that to the usual frequency of “coach” -- more like 5 in 330,000 words -- you have reason to suspect the article has something to do with coaching. The term “basketball” is even more extreme, appearing 150,000 times more often than usual. This is the idea behind the famous TF-IDF, long used to index web pages.
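To make the arithmetic concrete, here is a minimal sketch of that comparison using the “coach” numbers above. The function name `frequency_ratio` is our own, and this plain ratio of rates is only the intuition behind TF-IDF, not the standard TF-IDF formula (which weights terms by how many documents contain them).

```python
def frequency_ratio(count_in_doc, doc_length, background_count, background_length):
    """Return how many times more often a term occurs in a document
    than in ordinary (background) text."""
    doc_rate = count_in_doc / doc_length
    background_rate = background_count / background_length
    return doc_rate / background_rate


# "coach": 5 times in a 581-word article vs. about 5 in 330,000 words normally.
coach_ratio = frequency_ratio(5, 581, 5, 330_000)
print(round(coach_ratio))  # 568
```

So “coach” shows up several hundred times more often than usual, a strong hint about the topic, though still far short of the ratio quoted for “basketball”.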

|

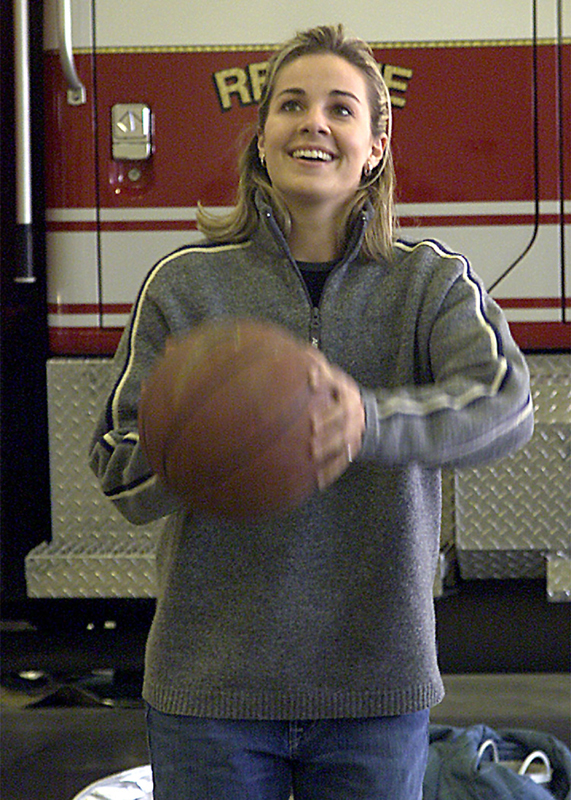

| Congratulations to Becky Hammon, first female NBA coach! Image via Wikipedia. |

Background information about entities ought to help us decide which of them are most salient. After all, an article’s author assumes her readers have some general understanding of the world, and probably a bit about sports too. Using background knowledge, we might be able to infer that the WNBA is a salient entity in the Becky Hammon article even though it only appears once.

To encourage research on leveraging background information, we are releasing a large dataset of annotations to accompany the New York Times Annotated Corpus, including resolved Freebase entity IDs and labels indicating which entities are salient. The salience annotations are determined by automatically aligning entities in the document with entities in accompanying human-written abstracts. Details of the salience annotations and some baseline results are described in our recent paper: A New Entity Salience Task with Millions of Training Examples (Jesse Dunietz and Dan Gillick).
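As a rough illustration of the labeling idea, the sketch below marks a document entity as salient when the same entity also appears in the abstract. This is a simplification under our own assumptions: the function name and the placeholder entity IDs are hypothetical, and the actual alignment procedure described in the paper may involve more than an exact ID match.

```python
def label_salience(document_entities, abstract_entities):
    """Map each document entity ID to True if it also occurs in the abstract."""
    abstract_ids = set(abstract_entities)
    return {entity: entity in abstract_ids for entity in document_entities}


# Placeholder IDs, not real Freebase MIDs.
labels = label_salience(
    ["entity_a", "entity_b", "entity_c"],  # entities found in the article
    ["entity_b"],                          # entities found in the abstract
)
print(labels)
```

Because the abstracts were written by people, this kind of alignment yields labels at scale without asking annotators to judge salience directly.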

Since our entity resolver works better for named entities like WNBA than for nominals like “coach” (this is the notoriously difficult word sense disambiguation problem, which we’ve previously touched on), the annotations are limited to names.

The output for each document is laid out as follows. The first line contains the NYT document ID and the headline; each subsequent line includes an entity index, an indicator for salience, the mention count for this entity in the document as determined by our coreference system, the text of the first mention of the entity, the byte offsets (start and end) for the first mention of the entity, and the resolved Freebase MID.
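For readers who want to load these records, here is a small parsing sketch for one entity line. It assumes the seven fields are tab-separated, in the order listed above, and that salience is written as `1` or `0`; check both assumptions against the released files. The example line is made up for illustration and is not taken from the dataset.

```python
from typing import NamedTuple


class EntityRecord(NamedTuple):
    index: int
    salient: bool
    mention_count: int
    first_mention: str
    start_offset: int
    end_offset: int
    mid: str


def parse_entity_line(line: str) -> EntityRecord:
    """Parse one entity line, assuming seven tab-separated fields."""
    fields = line.rstrip("\n").split("\t")
    if len(fields) != 7:
        raise ValueError(f"expected 7 fields, got {len(fields)}")
    index, salient, count, text, start, end, mid = fields
    return EntityRecord(
        int(index), salient == "1", int(count), text, int(start), int(end), mid
    )


# Hypothetical line with placeholder values, not real data.
example = "0\t1\t3\tExample Name\t10\t22\t/m/placeholder"
record = parse_entity_line(example)
print(record.first_mention, record.salient)
```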

Features like mention count and position in the document give reasonable salience predictions. But because they only describe what’s explicitly in the document, we expect that a system that uses background information to expose what’s implicit could give better results.
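A toy version of such a surface-feature predictor might look like the following. The rule and both thresholds (`min_mentions`, `lead_bytes`) are invented for illustration; they are not the baselines from the paper.

```python
def predict_salient(mention_count, first_mention_start, min_mentions=3, lead_bytes=200):
    """Guess salience from surface features alone: an entity counts as salient
    if it is mentioned often or first appears near the start of the document."""
    return mention_count >= min_mentions or first_mention_start < lead_bytes


print(predict_salient(5, 1000))  # frequent entity
print(predict_salient(1, 50))    # mentioned once, but very early
print(predict_salient(1, 1000))  # mentioned once, late in the text
```

An entity mentioned only once, late in the text, is rejected by a rule like this, which is exactly the kind of case where background knowledge could help.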

Download the data directly from Google Drive, or visit the project home page with more information at our Google Code site. We look forward to seeing what you come up with!
