Soli Radar-Based Perception and Interaction in Pixel 4

March 12, 2020

Posted by Jaime Lien, Research Engineer and Nicholas Gillian, Software Engineer, Google Advanced Technology and Projects

The Pixel 4 and Pixel 4 XL are optimized for ease of use, and a key feature helping to realize this goal is Motion Sense, which enables users to interact with their Pixel in numerous ways without touching the device. For example, with Motion Sense you can use specific gestures to change music tracks or instantly silence an incoming call. Motion Sense additionally detects when you're near your phone and when you reach for it, allowing your Pixel to be more helpful by anticipating your actions, such as by priming the camera to provide a seamless face unlock experience, politely lowering the volume of a ringing alarm as you reach to dismiss it, or turning off the display to save power when you’re no longer near the device.

The technology behind Motion Sense is Soli, the first integrated short-range radar sensor in a consumer smartphone, which facilitates close-proximity interaction with the phone without contact. Below, we discuss Soli’s core radar sensing principles, design of the signal processing and machine learning (ML) algorithms used to recognize human activity from radar data, and how we resolved some of the integration challenges to prepare Soli for use in consumer devices.

Designing the Soli Radar System for Motion Sense

The basic function of radar is to detect and measure properties of remote objects based on their interactions with radio waves. A classic radar system includes a transmitter that emits radio waves, which are then scattered, or redirected, by objects within their paths, with some portion of energy reflected back and intercepted by the radar receiver. Based on the received waveforms, the radar system can detect the presence of objects as well as estimate certain properties of these objects, such as distance and size.
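As a minimal illustration of the distance estimate mentioned above: an echo travels to the object and back, so the range is half the round-trip delay multiplied by the speed of light. The function name and the 20 cm example below are hypothetical and are not taken from Soli's implementation.

```python
C = 299_792_458.0  # speed of light in m/s


def range_from_round_trip(delay_s: float) -> float:
    """Distance to a reflector, given the round-trip delay of its echo."""
    # The wave covers the distance twice (out and back), hence the factor 1/2.
    return C * delay_s / 2.0


# Hypothetical example: a hand 20 cm from the sensor.
delay = 2 * 0.20 / C
print(f"delay = {delay * 1e9:.2f} ns, range = {range_from_round_trip(delay) * 100:.1f} cm")
```

The delay at hand-to-phone distances is on the order of a nanosecond, which gives a sense of why short-range sensing is demanding.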

Radar has been under active development as a detection and ranging technology for almost a century. Traditional radar approaches are designed for detecting large, rigid, distant objects, such as planes and cars; therefore, they lack the sensitivity and resolution for sensing complex motions within the requirements of a consumer handheld device. Thus, to enable Motion Sense, the Soli team developed a new, small-scale radar system, novel sensing paradigms, and algorithms from the ground up specifically for fine-grained perception of human interactions.

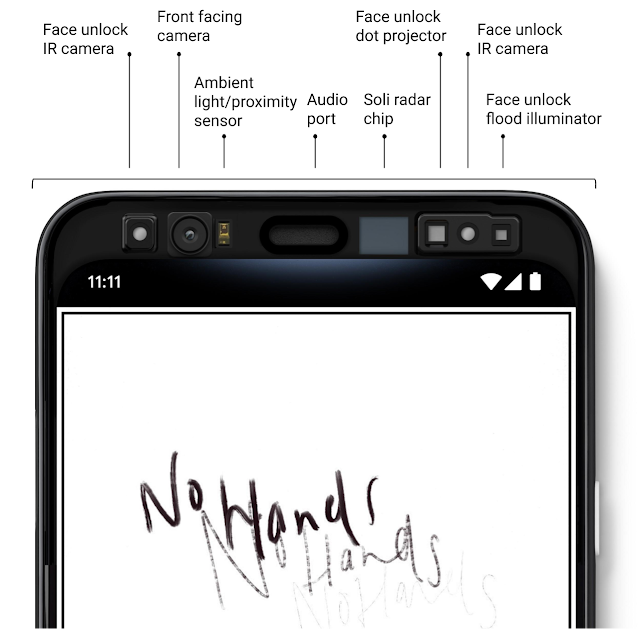

Classic radar designs rely on fine spatial resolution relative to target size in order to resolve different objects and distinguish their spatial structures. Such spatial resolution typically requires broad transmission bandwidth, narrow antenna beamwidth, and large antenna arrays. Soli, on the other hand, employs a fundamentally different sensing paradigm based on motion, rather than spatial structure. Because of this novel paradigm, we were able to fit Soli’s entire antenna array for Pixel 4 on a 5 mm x 6.5 mm x 0.873 mm chip package, allowing the radar to be integrated in the top of the phone. Remarkably, we developed algorithms that specifically do not require forming a well-defined image of a target’s spatial structure, in contrast to an optical imaging sensor, for example. Therefore, no distinguishable images of a person’s body or face are generated or used for Motion Sense presence or gesture detection.

Soli’s location in Pixel 4.

The animations below demonstrate how different actions exhibit distinctive motion features in the processed Soli signal. The vertical axis of each image represents range, or radial distance, from the sensor, increasing from top to bottom. The horizontal axis represents velocity toward or away from the sensor, with zero at the center, negative velocities (approaching targets) on the left, and positive velocities (receding targets) on the right. Energy received by the radar is mapped into these range-velocity dimensions and represented by the intensity of each pixel. Thus, strongly reflective targets tend to appear brighter against the surrounding noise floor than weakly reflective targets. The distribution and trajectory of energy within these range-velocity mappings show clear differences for a person walking, reaching, and swiping over the device.

In the left image, we see reflections from multiple body parts appearing on the negative side of the velocity axis as the person approaches the device, then converging at zero velocity at the top of the image as the person stops close to the device. In the middle image depicting a reach, a hand starts from a stationary position 20 cm from the sensor, then accelerates with negative velocity toward the device, and finally decelerates to a stop as it reaches the device. The reflection corresponding to the hand moves from the middle to the top of the image, corresponding to the hand’s decreasing range from the sensor over the course of the gesture. Finally, the third image shows a hand swiping over the device, moving with negative velocity toward the sensor on the left half of the velocity axis, passing directly over the sensor where its radial velocity is zero, and then away from the sensor on the right half of the velocity axis, before reaching a stop on the opposite side of the device.

Left: Presence - Person walking towards the device. Middle: Reach - Person reaching towards the device. Right: Swipe - Person swiping over the device.
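A range-velocity mapping of this kind is commonly computed with a two-dimensional FFT over a frame of radar samples. The sketch below assumes a chirped radar whose frame is a complex array of shape (chirps, samples per chirp); this post does not specify Soli's waveform or transforms, so the function name, frame sizes, and synthetic target are illustrative assumptions only. The output follows the layout described above: rows are range bins, and columns are velocity bins with zero at the center.

```python
import numpy as np


def range_doppler_map(frame: np.ndarray) -> np.ndarray:
    """Return a (range_bins, velocity_bins) power map in dB.

    frame: complex array of shape (num_chirps, samples_per_chirp).
    """
    num_chirps, num_samples = frame.shape
    # Taper both axes to reduce spectral leakage between neighboring bins.
    window = np.outer(np.hanning(num_chirps), np.hanning(num_samples))
    # FFT along each chirp (fast time) resolves range.
    range_fft = np.fft.fft(frame * window, axis=1)
    # FFT across chirps (slow time) resolves velocity; shift zero to the center.
    doppler_fft = np.fft.fftshift(np.fft.fft(range_fft, axis=0), axes=0)
    power_db = 20.0 * np.log10(np.abs(doppler_fft) + 1e-12)
    return power_db.T  # rows: range, columns: velocity


# Synthetic frame (hypothetical sizes): one reflector plus weak noise.
NUM_CHIRPS, NUM_SAMPLES = 32, 64
RANGE_BIN, DOPPLER_BIN = 12, -5  # a negative bin stands in for an approaching target
rng = np.random.default_rng(0)
chirp_idx = np.arange(NUM_CHIRPS)[:, None]
sample_idx = np.arange(NUM_SAMPLES)[None, :]
phase = 2 * np.pi * (RANGE_BIN * sample_idx / NUM_SAMPLES
                     + DOPPLER_BIN * chirp_idx / NUM_CHIRPS)
noise = 0.1 * (rng.standard_normal((NUM_CHIRPS, NUM_SAMPLES))
               + 1j * rng.standard_normal((NUM_CHIRPS, NUM_SAMPLES)))
frame = np.exp(1j * phase) + noise

rd_map = range_doppler_map(frame)
peak_range, peak_column = np.unravel_index(np.argmax(rd_map), rd_map.shape)
peak_velocity_bin = int(peak_column) - NUM_CHIRPS // 2  # signed, zero at center
print("strongest cell: range bin", int(peak_range), "velocity bin", peak_velocity_bin)
```

The brightest cell lands at the reflector's range bin, left of center on the velocity axis, which matches how an approaching target is drawn in the images above.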

The signal processing pipeline we designed for Soli combines custom filters and coherent integration steps that boost signal-to-noise ratio, attenuate unwanted interference, and differentiate reflections off a person from noise and clutter. These signal processing features enable Soli to operate at low power within the constraints of a consumer smartphone.
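To see why coherent integration boosts signal-to-noise ratio, consider averaging several pulses whose echoes share the same phase: the echo adds constructively while independent noise partly cancels, so averaging N pulses improves SNR by roughly 10·log10(N) dB. The sketch below is a generic textbook illustration with made-up amplitudes and pulse counts; it is not Soli's actual pipeline.

```python
import numpy as np

rng = np.random.default_rng(0)
num_pulses, num_samples = 16, 4096  # hypothetical sizes
echo = 0.5 * np.exp(1j * 0.3)       # same complex amplitude in every pulse

# Independent complex Gaussian noise with unit power per sample.
noise = (rng.standard_normal((num_pulses, num_samples))
         + 1j * rng.standard_normal((num_pulses, num_samples))) / np.sqrt(2)
pulses = echo + noise


def snr_db(observed: np.ndarray, signal: complex) -> float:
    """Estimate SNR in dB, given the known signal and noisy observations."""
    noise_power = np.mean(np.abs(observed - signal) ** 2)
    return float(10 * np.log10(np.abs(signal) ** 2 / noise_power))


single_pulse_snr = snr_db(pulses[0], echo)
integrated_snr = snr_db(pulses.mean(axis=0), echo)  # coherent average over pulses
gain_db = integrated_snr - single_pulse_snr
print(f"measured gain: {gain_db:.1f} dB, theory: {10 * np.log10(num_pulses):.1f} dB")
```

The gain depends on the echo staying phase-aligned across pulses, which is why this kind of integration is called coherent.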

Designing Machine Learning Algorithms for Radar

After using Soli’s signal processing pipeline to filter and boost the original radar signal, the resulting signal transformations are fed to Soli’s ML models for gesture classification. These models have been trained to accurately detect and recognize the Motion Sense gestures with low latency.

There are two major research challenges to robustly classifying in-air gestures that are common to any motion sensing technology. The first is that every user is unique and performs even simple motions, such as a swipe, in a myriad of ways. The second is that throughout the day, there may be numerous extraneous motions within the range of the sensor that may appear similar to target gestures. Furthermore, when the phone moves, the whole world looks like it’s moving from the point of view of the motion sensor in the phone.

Solving these challenges required designing custom ML algorithms optimized for low-latency detection of in-air gestures from radar signals. Soli’s ML models consist of neural networks trained using millions of gestures recorded from thousands of Google volunteers. These radar recordings were mixed with hundreds of hours of background radar recordings from other Google volunteers containing generic motions made near the device. Soli’s ML models were trained using TensorFlow and optimized to run directly on Pixel’s low-power digital signal processor (DSP). This allows us to run the models at low power, even when the main application processor is powered down.
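The post does not say how the gesture and background recordings were combined. One plausible reading is that background clips serve as "no gesture" examples alongside the labeled gesture clips, so the model learns to reject everyday motion. The sketch below shows that reading with NumPy; the function name, label values, and array shapes are all hypothetical.

```python
import numpy as np

BACKGROUND_LABEL = 0  # hypothetical class id meaning "no gesture"


def build_training_set(gesture_clips, gesture_labels, background_clips, rng):
    """Combine labeled gesture clips with background clips and shuffle them.

    gesture_clips:    array of shape (num_gestures, ...), one clip per row
    gesture_labels:   integer array of shape (num_gestures,), values >= 1
    background_clips: array of shape (num_background, ...), same clip shape
    """
    clips = np.concatenate([gesture_clips, background_clips], axis=0)
    labels = np.concatenate([
        gesture_labels,
        np.full(len(background_clips), BACKGROUND_LABEL, dtype=gesture_labels.dtype),
    ])
    order = rng.permutation(len(clips))  # interleave the two sources
    return clips[order], labels[order]


# Toy data standing in for real recordings (shapes are made up).
rng = np.random.default_rng(0)
gestures = rng.standard_normal((6, 8, 8))
gesture_ids = np.array([1, 2, 1, 3, 2, 1])
background = rng.standard_normal((10, 8, 8))

clips, labels = build_training_set(gestures, gesture_ids, background, rng)
print(clips.shape, np.bincount(labels))
```

In practice the ratio of background to gesture examples would be a tuning decision; the toy data here only demonstrates the bookkeeping.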

Taking Soli from Concept to Product

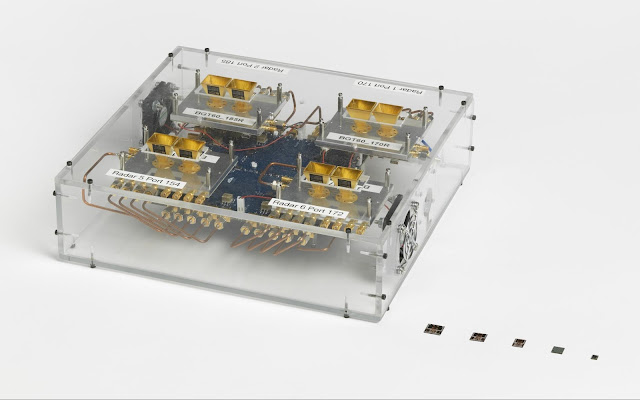

Soli’s integration into the Pixel smartphone was possible because the end-to-end radar system — including hardware, software, and algorithms — was carefully designed to enable touchless interaction within the size and power constraints of consumer devices. Soli’s miniature hardware allowed the full radar system to fit into the limited space in Pixel’s upper bezel, which was a significant team accomplishment. Indeed, the first Soli prototype in 2014 was the size of a desktop computer. We combined hardware innovations with our novel temporal sensing paradigm described earlier in order to shrink the entire radar system down to a single 5.0 mm x 6.5 mm RFIC, including antennas on package. The Soli team also introduced several innovative hardware power management schemes and optimized Soli’s compute cycles, enabling Motion Sense to fit within the power budget of the smartphone.

Hardware innovations included iteratively shrinking the radar system from a desktop-sized prototype to a single 5.0 mm x 6.5 mm RFIC, including antennas on package.

Vibration due to audio on Pixel 4 appearing as an artifact in Soli’s range-doppler signal representation.

The successful integration of Soli into Pixel 4 and Pixel 4 XL devices demonstrates for the first time the feasibility of radar-based machine perception in an everyday mobile consumer device. Motion Sense in Pixel devices shows Soli’s potential to bring seamless context awareness and gesture recognition for explicit and implicit interaction. We are excited to continue researching and developing Soli to enable new radar-based sensing and perception capabilities.

Acknowledgments

The work described above was a collaborative effort between Google Advanced Technology and Projects (ATAP) and the Pixel and Android product teams. We particularly thank Patrick Amihood for major contributions to this blog post.


Labels:

- Machine Perception

- Product
