Open Source Vizier: Towards reliable and flexible hyperparameter and blackbox optimization

February 2, 2023

Posted by Xingyou (Richard) Song, Research Scientist, and Chansoo Lee, Software Engineer, Google Research, Brain Team

Google Vizier is the de facto system for blackbox optimization over objective functions and hyperparameters across Google, having served some of Google’s largest research efforts and optimized a wide range of products (e.g., Search, Ads, YouTube). For research, it has not only reduced language model latency for users, designed computer architectures, accelerated hardware, assisted protein discovery, and enhanced robotics, but also provided a reliable backend interface for users to search for neural architectures and evolve reinforcement learning algorithms. To operate at the scale of optimizing thousands of users’ critical systems and tuning millions of machine learning models, Google Vizier had to solve key design challenges in supporting diverse use cases and workflows while remaining highly fault-tolerant.

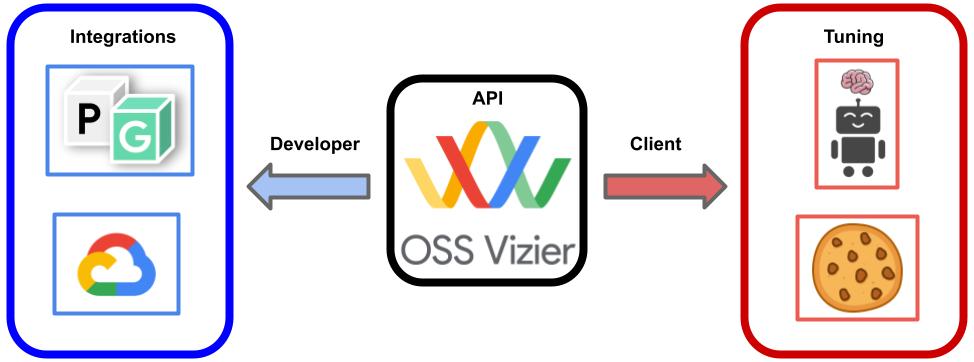

Today we are excited to announce Open Source (OSS) Vizier (with an accompanying systems whitepaper published at the AutoML Conference 2022), a standalone Python package based on Google Vizier. OSS Vizier is designed for two main purposes: (1) managing and optimizing experiments at scale in a reliable and distributed manner for users, and (2) helping automated machine learning (AutoML) researchers develop and benchmark algorithms.

System design

OSS Vizier works by having a server provide a service, namely the optimization of blackbox objectives (functions), to multiple clients. In the main workflow, a client sends a remote procedure call (RPC) asking for a suggestion (i.e., a proposed input for the client’s blackbox function). The service then spawns a worker that launches an algorithm (i.e., a Pythia policy) to compute the next suggestions. The clients evaluate these suggestions to obtain the corresponding objective values and measurements, which they send back to the service. This pipeline repeats many times to form an entire tuning trajectory.
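To make the suggest, evaluate, and report loop concrete, here is a minimal in-process sketch. The classes `Trial`, `RandomSearchPolicy`, and `ToyService` are hypothetical stand-ins, not OSS Vizier's API: direct method calls replace the RPCs, and plain random search replaces a real Pythia policy.

```python
import random
from dataclasses import dataclass
from typing import Optional


@dataclass
class Trial:
    """A single suggestion plus (eventually) its measured objective value."""
    trial_id: int
    parameters: dict
    objective: Optional[float] = None  # None until the client reports back


class RandomSearchPolicy:
    """Hypothetical stand-in for a Pythia policy: proposes random points."""

    def __init__(self, bounds, seed=0):
        self.bounds = bounds  # {name: (low, high)}
        self.rng = random.Random(seed)

    def suggest(self, completed_trials, count):
        # A real policy would use completed_trials to pick better points;
        # random search simply ignores them.
        return [
            {name: self.rng.uniform(lo, hi) for name, (lo, hi) in self.bounds.items()}
            for _ in range(count)
        ]


class ToyService:
    """Hypothetical in-process stand-in for the server."""

    def __init__(self, policy):
        self.policy = policy
        self.trials = []

    def suggest(self, count=1):
        # The service asks the policy for new points, given finished trials.
        completed = [t for t in self.trials if t.objective is not None]
        new_trials = []
        for params in self.policy.suggest(completed, count):
            trial = Trial(trial_id=len(self.trials) + 1, parameters=params)
            self.trials.append(trial)
            new_trials.append(trial)
        return new_trials

    def complete(self, trial_id, objective):
        # The client reports the measured objective for a suggestion.
        self.trials[trial_id - 1].objective = objective

    def best_trial(self):
        completed = [t for t in self.trials if t.objective is not None]
        return max(completed, key=lambda t: t.objective)


def blackbox(params):
    # Hypothetical client-side objective to maximize; peak at x = 2.0.
    return -(params["x"] - 2.0) ** 2


service = ToyService(RandomSearchPolicy({"x": (0.0, 5.0)}))
for _ in range(20):  # repeating the loop forms the tuning trajectory
    for trial in service.suggest(count=1):
        service.complete(trial.trial_id, blackbox(trial.parameters))

best = service.best_trial()
print(best.trial_id, best.parameters, best.objective)
```

Keeping the policy behind a narrow `suggest(completed_trials, count)` interface is what lets the service swap algorithms without changing the client loop.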

Because OSS Vizier uses the ubiquitous gRPC library, which is compatible with most programming languages (e.g., C++ and Rust), it allows maximum flexibility and customization: users can write their own custom clients, and even algorithms, outside of the default Python interface. Since the entire process is saved to an SQL datastore, the service can recover smoothly after a crash, and usage patterns can be stored as valuable datasets for research into meta-learning and multitask transfer-learning methods such as the OptFormer and HyperBO.
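Crash recovery follows from persisting every suggestion and result as soon as it is produced. The sketch below illustrates the idea with Python's built-in `sqlite3` module and a hypothetical one-table schema; OSS Vizier's actual datastore schema is not described in this post, so the table, column, and function names are assumptions.

```python
import json
import os
import sqlite3
import tempfile


def open_datastore(path):
    """Open (or create) a SQLite file with one row per trial (hypothetical schema)."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS trials ("
        " trial_id INTEGER PRIMARY KEY,"
        " parameters TEXT NOT NULL,"  # JSON-encoded suggestion
        " objective REAL)"            # NULL while the trial is still pending
    )
    conn.commit()
    return conn


def save_suggestion(conn, trial_id, parameters):
    conn.execute(
        "INSERT INTO trials (trial_id, parameters) VALUES (?, ?)",
        (trial_id, json.dumps(parameters)),
    )
    conn.commit()  # commit immediately so a crash cannot lose the suggestion


def save_result(conn, trial_id, objective):
    conn.execute(
        "UPDATE trials SET objective = ? WHERE trial_id = ?", (objective, trial_id)
    )
    conn.commit()


def load_trials(conn):
    rows = conn.execute(
        "SELECT trial_id, parameters, objective FROM trials ORDER BY trial_id"
    ).fetchall()
    return [(tid, json.loads(params), obj) for tid, params, obj in rows]


path = os.path.join(tempfile.mkdtemp(), "study.db")

conn = open_datastore(path)
save_suggestion(conn, 1, {"learning_rate": 0.1})
save_result(conn, 1, 0.82)
save_suggestion(conn, 2, {"learning_rate": 0.01})  # still being evaluated
conn.close()  # simulate the server going down

conn = open_datastore(path)  # a restarted server reloads the study state
recovered = load_trials(conn)
conn.close()
print(recovered)
# [(1, {'learning_rate': 0.1}, 0.82), (2, {'learning_rate': 0.01}, None)]
```

After a restart, the pending trial (objective `NULL`) is still known, so the server can wait for its result instead of losing track of it.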

Usage

Because OSS Vizier is built as a service to which clients can send requests at any point in time, it is designed for a broad range of scenarios: the budget of evaluations, or trials, can range from tens to millions, and the evaluation latency can range from seconds to weeks. Evaluations can be done asynchronously (e.g., tuning an ML model) or in synchronous batches (e.g., wet lab settings involving multiple simultaneous experiments). Furthermore, evaluations may fail due to transient errors and be retried, or fail due to persistent errors (e.g., the evaluation is impossible) and should not be retried.
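The distinction between transient and persistent failures maps naturally onto a client-side retry policy. A minimal sketch, assuming hypothetical `TransientError`/`PersistentError` exception types and status strings (`COMPLETED`, `FAILED`, `INFEASIBLE`) chosen for illustration; none of these are taken from the OSS Vizier API.

```python
class TransientError(Exception):
    """E.g., a preempted worker or a network blip: worth retrying."""


class PersistentError(Exception):
    """E.g., an impossible configuration: retrying cannot help."""


def evaluate_with_retries(evaluate, params, max_attempts=3):
    """Run one trial; return (status, value) under a simple retry policy."""
    attempt = 0
    while True:
        attempt += 1
        try:
            return "COMPLETED", evaluate(params)
        except PersistentError:
            return "INFEASIBLE", None  # report once and never retry
        except TransientError:
            if attempt >= max_attempts:
                return "FAILED", None  # give up after repeated transient errors


def make_flaky_objective(transient_failures):
    """Hypothetical objective that fails transiently a fixed number of times."""
    calls = {"n": 0}

    def objective(params):
        calls["n"] += 1
        if params["x"] < 0:
            raise PersistentError("x must be non-negative")
        if calls["n"] <= transient_failures:
            raise TransientError("worker preempted")
        return params["x"] ** 2

    return objective


print(evaluate_with_retries(make_flaky_objective(2), {"x": 3.0}))   # ('COMPLETED', 9.0)
print(evaluate_with_retries(make_flaky_objective(5), {"x": 3.0}))   # ('FAILED', None)
print(evaluate_with_retries(make_flaky_objective(0), {"x": -1.0}))  # ('INFEASIBLE', None)
```

Reporting a persistent failure as a distinct status, rather than silently dropping the trial, lets the optimizer learn to avoid that region of the search space.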

This flexibility supports a wide variety of applications, including tuning the hyperparameters of deep learning models and optimizing non-computational objectives, which can be physical, chemical, biological, mechanical, or even human-evaluated (e.g., cookie recipes).

Integrations, algorithms, and benchmarks

Just as Google Vizier is heavily integrated with many of Google’s internal frameworks and products, OSS Vizier will naturally be integrated with many of Google’s open-source and external frameworks. Most prominently, OSS Vizier will serve as a distributed backend for PyGlove to allow large-scale evolutionary searches over combinatorial primitives such as neural architectures and reinforcement learning algorithms. Furthermore, OSS Vizier shares the same client-based API as Vertex Vizier, allowing users to quickly switch between open-source and production-quality services.

For AutoML researchers, OSS Vizier also comes with a useful collection of algorithms and benchmarks (i.e., objective functions) unified under common APIs for assessing the strengths and weaknesses of proposed methods. Most notably, via TensorFlow Probability, researchers can now use a JAX-based Gaussian Process Bandit algorithm, based on the default algorithm that Google Vizier uses to tune internal users’ objectives.
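For intuition about what a Gaussian Process Bandit does, the following is a generic GP-UCB sketch in pure Python: fit a GP to the trials seen so far, then suggest the candidate with the highest upper confidence bound (posterior mean plus `beta` times posterior standard deviation). It does not reproduce the JAX/TensorFlow Probability implementation in OSS Vizier; the RBF kernel, length scale, noise level, `beta`, candidate grid, and objective are all assumptions made for illustration.

```python
import math


def rbf(a, b, length_scale):
    """Squared-exponential kernel with unit signal variance."""
    return math.exp(-((a - b) ** 2) / (2.0 * length_scale**2))


def cholesky(a):
    """Lower-triangular L with L @ L^T == a (a must be positive definite)."""
    n = len(a)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                L[i][j] = math.sqrt(a[i][i] - s)
            else:
                L[i][j] = (a[i][j] - s) / L[j][j]
    return L


def solve_lower(L, b):
    """Forward substitution: solve L z = b."""
    z = []
    for i in range(len(b)):
        z.append((b[i] - sum(L[i][k] * z[k] for k in range(i))) / L[i][i])
    return z


def solve_upper_from_lower(L, z):
    """Back substitution: solve L^T w = z using the lower factor L."""
    n = len(z)
    w = [0.0] * n
    for i in reversed(range(n)):
        w[i] = (z[i] - sum(L[k][i] * w[k] for k in range(i + 1, n))) / L[i][i]
    return w


def fit_gp(xs, ys, length_scale=0.2, noise=1e-4):
    """Condition a zero-mean GP on (xs, ys); return a predictor x -> (mean, var)."""
    K = [[rbf(a, b, length_scale) + (noise if i == j else 0.0)
          for j, b in enumerate(xs)] for i, a in enumerate(xs)]
    L = cholesky(K)
    alpha = solve_upper_from_lower(L, solve_lower(L, ys))  # (K + noise*I)^-1 y

    def predict(x):
        k_star = [rbf(x, xi, length_scale) for xi in xs]
        mean = sum(k * a for k, a in zip(k_star, alpha))
        v = solve_lower(L, k_star)
        # Prior variance k(x, x) = 1; clamp tiny negative rounding errors.
        var = max(1.0 - sum(vi * vi for vi in v), 0.0)
        return mean, var

    return predict


def suggest_ucb(xs, ys, candidates, beta=2.0):
    """Pick the candidate maximizing the upper confidence bound."""
    predict = fit_gp(xs, ys)

    def ucb(x):
        mean, var = predict(x)
        return mean + beta * math.sqrt(var)

    return max(candidates, key=ucb)


def objective(x):
    return -((x - 0.3) ** 2)  # hypothetical blackbox; maximum at x = 0.3


candidates = [i / 100 for i in range(101)]  # discretized search space [0, 1]
xs = [0.0, 1.0]
ys = [objective(x) for x in xs]
for _ in range(10):
    x_next = suggest_ucb(xs, ys, candidates)
    xs.append(x_next)
    ys.append(objective(x_next))

print("best x:", xs[ys.index(max(ys))])
```

Larger `beta` favors exploring uncertain regions; smaller `beta` favors exploiting the current posterior mean.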

Resources and future direction

We provide links to the codebase, documentation, and systems whitepaper. We plan to allow user contributions, especially in the form of algorithms and benchmarks, and to integrate further with the open-source AutoML ecosystem. Going forward, we hope OSS Vizier becomes a core tool for expanding research and development in blackbox optimization and hyperparameter tuning.

Acknowledgements

OSS Vizier was developed by members of the Google Vizier team in collaboration with the TensorFlow Probability team: Setareh Ariafar, Lior Belenki, Emily Fertig, Daniel Golovin, Tzu-Kuo Huang, Greg Kochanski, Chansoo Lee, Sagi Perel, Adrian Reyes, Xingyou (Richard) Song, and Richard Zhang.

In addition, we thank Srinivas Vasudevan, Jacob Burnim, Brian Patton, Ben Lee, Christopher Suter, and Rif A. Saurous for further TensorFlow Probability integrations, Daiyi Peng and Yifeng Lu for PyGlove integrations, Hao Li for Vertex/Cloud integrations, Yingjie Miao for AutoRL integrations, Tom Hennigan, Varun Godbole, Pavel Sountsov, Alexey Volkov, Mihir Paradkar, Richard Belleville, Bu Su Kim, Vytenis Sakenas, Yujin Tang, Yingtao Tian, and Yutian Chen for open source and infrastructure help, and George Dahl, Aleksandra Faust, Claire Cui, and Zoubin Ghahramani for discussions.

Finally, we thank Tom Small for designing the animation for this post.