Introducing a New Foveation Pipeline for Virtual/Mixed Reality

December 5, 2017

Posted by Behnam Bastani, Software Engineer Manager and Eric Turner, Software Engineer, Daydream

Quick links

Virtual Reality (VR) and Mixed Reality (MR) offer a novel way to immerse people into new and compelling experiences, from gaming to professional training. However, current VR/MR technologies present a fundamental challenge: to present images at the extremely high resolution required for immersion places enormous demands on the rendering engine and transmission process. Headsets often have insufficient display resolution, which can limit the field of view, worsening the experience. But, to drive a higher resolution headset, the traditional rendering pipeline requires significant processing power that even high-end mobile processors cannot achieve. As research continues to deliver promising new techniques to increase display resolution, the challenges of driving those displays will continue to grow.

In order to further improve the visual experience in VR and MR, we introduce a pipeline that takes advantage of the characteristics of human visual perception to enable an amazing visual experience at low compute and power cost. The pipeline proposed in this article considers the full system dependency including the rendering engine, memory bandwidth and capability of display module itself. We determined that the current limitation is not just in the content creation, but it also may be in transmitting data, handling latency and enabling interaction with real objects (mixed reality applications). The pipeline consists of 1. Foveated Rendering with a focus on reducing of compute per pixel. 2. Foveated Image Processing with a focus on the reduction of visual artifacts and 3. Foveated Transmission with a focus on bits per pixel transmitted.

Foveated Rendering

In the human visual system, the fovea centralis allows us to see at high-fidelity in the center of our vision, allowing our brain to pay less attention to things in our peripheral vision. Foveated rendering takes advantage of this characteristic to improve the performance of the rendering engine by reducing the spatial or bit-depth resolution of objects in our peripheral vision. To make this work, the location of the High Acuity (HA) region needs to be updated with eye-tracking to align with eye saccades, which preserves the perception of a constant high-resolution across the field of view. In contrast, systems with no eye-tracking may need to render a much larger HA region.

|

| The left image is rendered at full resolution. The right image uses two layers of foveation — one rendered at high resolution (inside the yellow region) and one at lower resolution (outside). |

|

| A smooth full rendering (image on the left). The image on the right shows temporal artifacts introduced by motion in foveated region. |

Phase-Aligned Rendering

Aliasing occurs in the Low-Acuity (LA) region during foveated rendering due to the subsampling of rendered content. In traditional foveated rendering discussed above, these aliasing artifacts flicker from frame to frame, since the display pixel grid moves across the virtual scene as the user moves their head. The motion of these pixels relative to the scene cause any existing aliasing artifacts to flicker, which is highly perceptible to the user, even in the periphery.

In Phase-Aligned rendering, we force the LA region frustums to be aligned rotationally to the world (e.g. always facing north, east, south, etc.), not the current frame's head-rotation. The aliasing artifacts are mostly invariant to head pose and therefore much less detectable. After upsampling, these regions are then reprojected onto the final display screen to compensate for the user's head rotation, which reduces temporal flicker. As with traditional foveation, we render the high-acuity region in a separate pass, and overlay it onto the merged image at the location of the fovea. The figure below compares traditional foveated rendering with phase-aligned rendering, both at the same level of foveation.

|

| Temporal artifacts in non-world aligned foveated rendered content (left) and the phase-aligned method (right). |

Conformal Rendering

Another approach for foveated rendering is to render content in a space that matches the smoothly varying reduction in resolution of our visual acuity, based on a nonlinear mapping of the screen distance from the visual fixation point.

This method gives two main benefits. First, by more closely matching the visual fidelity fall-off of the human eye, we can reduce the total number of pixels computed compared to other foveation techniques. Second, by using a smooth fall-off in fidelity, we prevent the user from seeing a clear dividing line between High-Acuity and Low-Acuity, which is often one of the first artifacts that is noticed. These benefits allow for aggressive foveation to be used while preserving the same quality levels, yielding more savings.

We perform this method by warping the vertices of the virtual scene into non-linear space. This scene is then rasterized at a reduced resolution, then unwarped into linear space as a post-processing effect combined with lens distortion correction.

Foveated Image Processing

HMDs often require image processing steps to be performed after rendering, such as local tone mapping, lens distortion correction, or lighting blending. With foveated image-processing, different operations are applied for different foveation regions. As an example, lens distortion correction, including chromatic aberration correction, may not require the same spatial accuracy for each part of the display. By running lens distortion correction on foveated content before upscaling, significant savings are gained in computation. This technique does not introduce perceptible artifacts.

|

| Correction for head-mounted-display lens chromatic aberration in foveated space. Top image shows the conventional pipeline. The bottom image (in Green) shows the operation in the foveated space. |

Foveated Transmission

A non-trivial source of power consumption for standalone HMDs is data transmission from the system-on-a-chip (SoC) to the display module. Foveated transmission aims to save power and bandwidth by transmitting the minimum amount of data necessary to the display as shown in figure below.

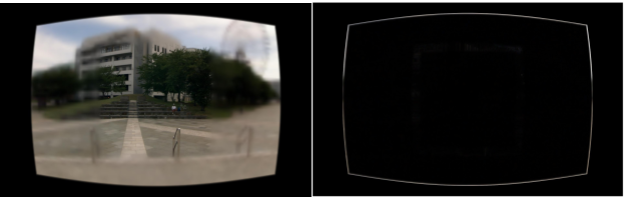

|

| Rather than streaming upscaled foveated content (left image), foveated transmission enables streaming content pre-reconstruction (right image) and reducing the number of bits transmitted. |

|

| Comparison of full integration of foveation and compression techniques (left) versus typical flickering artifacts that may be introduced by applying DSC to foveated content (right). |

We have focused on a few components of a “foveation pipeline” for MR and VR applications. By considering the impact of foveation in every part of a display system — rendering, processing and transmission — we can enable the next generation of lightweight, low-power, and high resolution MR/VR HMDs. This topic has been an active area of research for many years and it seems reasonable to expect the appearance of VR and MR headsets with foveated pipelines in the coming years.

Acknowledgements

We would like to recognize the work done by the following collaborators:

- Haomiao Jiang and Carlin Vieri on display compression and foveated transmission

- Brian Funt and Sylvain Vignaud on the development of new foveated rendering algorithms

Quick links

×

❮

❯