Enabling E-Textile Microinteractions: Gestures and Light through Helical Structures

May 15, 2020

Posted by Alex Olwal, Research Scientist, Google Research

Textiles have the potential to help technology blend into our everyday environments and objects by improving aesthetics, comfort, and ergonomics. Consumer devices have started to leverage these opportunities through fabric-covered smart speakers and braided headphone cords, while advances in materials and flexible electronics have enabled the incorporation of sensing and display into soft form factors, such as jackets, dresses, and blankets.

|

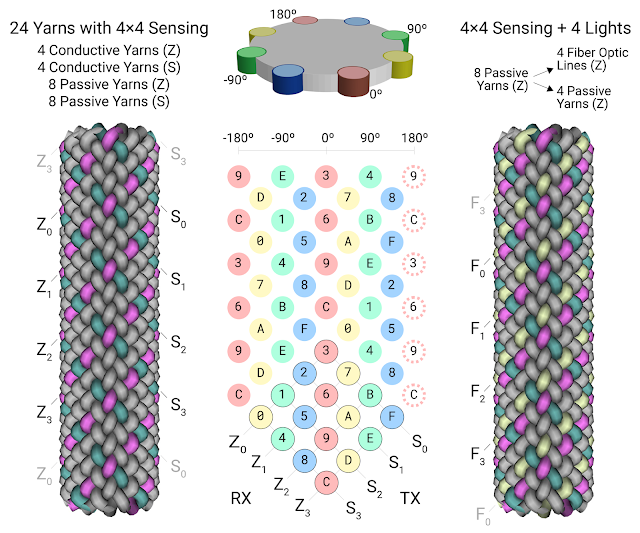

| A scalable interactive E-textile architecture with embedded touch sensing, gesture recognition and visual feedback. |

For insight into how this works, please see this video about E-textile microinteractions and this video about the E-textile architecture.

|

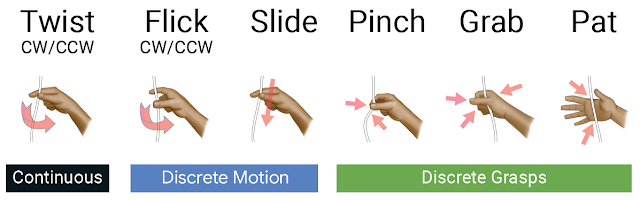

| E-textile microinteractions combining continuous sensing with discrete motion and grasps. |

Braiding generally refers to the diagonal interweaving of three or more material strands. While braids are traditionally used for aesthetics and structural integrity, they can also be used to enable new sensing and display capabilities.

Whereas cords can be made to detect basic touch gestures through capacitive sensing, we developed a helical sensing matrix (HSM) that enables a larger gesture space. The HSM is a braid consisting of electrically insulated conductive textile yarns and passive support yarns, where conductive yarns in opposite directions take the role of transmit and receive electrodes to enable mutual capacitive sensing. The capacitive coupling at their intersections is modulated by the user’s fingers, and these interactions can be sensed anywhere on the cord since the braided pattern repeats along the length.

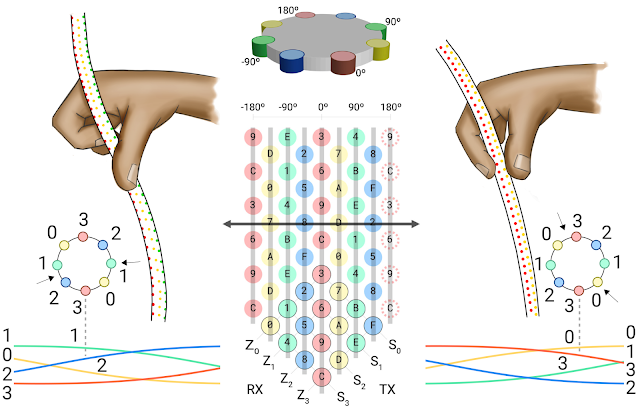
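As a rough illustration of the mutual capacitive sensing described above, the sketch below compares one scan of the transmit/receive intersections against an untouched baseline and reports the intersections whose coupling changed by more than a threshold. The matrix size, the readings, the threshold, and the function name `touched_intersections` are all hypothetical; the post does not describe the scanning electronics or signal processing at this level of detail.

```python
from typing import List, Tuple

Matrix = List[List[float]]  # rows: transmit yarns, columns: receive yarns


def touched_intersections(
    baseline: Matrix, scan: Matrix, threshold: float
) -> List[Tuple[int, int]]:
    """Return (tx, rx) index pairs whose reading moved away from the
    untouched baseline by more than `threshold` (hypothetical units)."""
    hits = []
    for tx, (base_row, scan_row) in enumerate(zip(baseline, scan)):
        for rx, (base, value) in enumerate(zip(base_row, scan_row)):
            if abs(value - base) > threshold:
                hits.append((tx, rx))
    return hits


# Made-up readings for a 2x3 matrix; a finger near (1, 2) changes the coupling.
baseline = [[10.0, 10.0, 10.0], [10.0, 10.0, 10.0]]
scan = [[10.1, 9.9, 10.0], [10.0, 9.8, 7.5]]
print(touched_intersections(baseline, scan, threshold=1.0))
```

Because the braided pattern repeats along the cord, the same small matrix would describe a touch anywhere along its length.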

A key insight is that the two axial columns in an HSM that share a common set of electrodes (and color in the diagram of the flattened matrix) are 180° opposite each other. Thus, pinching and rolling the cord activates a set of electrodes and allows us to track relative motion across these columns. Rotation detection identifies the current phase with respect to the set of time-varying sinusoidal signals that are offset by 90°. The braid allows the user to initiate rotation anywhere, and is scalable with a small set of electrodes.

Rotation is deduced from horizontal finger motion across the columns. The plots below show the relative capacitive signal strengths, which change with finger proximity.
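One common way to read a phase out of signals that are offset by 90° is the standard quadrature approach: treat two of the signals as cosine and sine components and take `atan2`. The sketch below assumes two zero-centered, equally scaled signals, which simplifies the set of signals described above and is not necessarily the method used in this work; the function names are illustrative.

```python
import math
from typing import Iterable, Tuple


def phase_from_quadrature(sig_a: float, sig_b: float) -> float:
    """Phase angle in radians from two zero-centered signals 90 degrees apart."""
    return math.atan2(sig_b, sig_a)


def accumulate_rotation(samples: Iterable[Tuple[float, float]]) -> float:
    """Sum wrapped phase differences to get the net relative rotation (radians).

    Only changes between consecutive samples are used, so the starting
    phase (where the user grabs the cord) does not matter.
    """
    total = 0.0
    prev = None
    for sig_a, sig_b in samples:
        phase = phase_from_quadrature(sig_a, sig_b)
        if prev is not None:
            delta = phase - prev
            # Wrap into [-pi, pi) so crossing the +/-pi boundary is a small step.
            delta = (delta + math.pi) % (2 * math.pi) - math.pi
            total += delta
        prev = phase
    return total


# Simulated roll of one and a half turns, sampled every 22.5 degrees.
angles = [k * math.pi / 8 for k in range(25)]
samples = [(math.cos(t), math.sin(t)) for t in angles]
print(accumulate_rotation(samples) / (2 * math.pi), "turns")
```

Accumulating differences rather than absolute phase matches the idea that the user can start rolling anywhere on the cord. The approach assumes consecutive samples are less than half a turn apart.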

This e-textile architecture makes the cord touch-sensitive, but its softness and malleability limit suitable interactions compared to rigid touch surfaces. With the unique material in mind, our design guidelines emphasize:

- Simple gestures. We design for short interactions where the user either makes a single discrete gesture or performs a continuous manipulation.

- Closed-loop feedback. We want to help the user discover functionality and get continuous feedback on their actions. Where possible, we provide visual, tactile, and audio feedback integrated in the device.

Our e-textile enables interaction based on capacitive sensing of proximity, contact area, contact time, roll, and pressure.

Braided fiber optics strands create the illusion of directional motion.

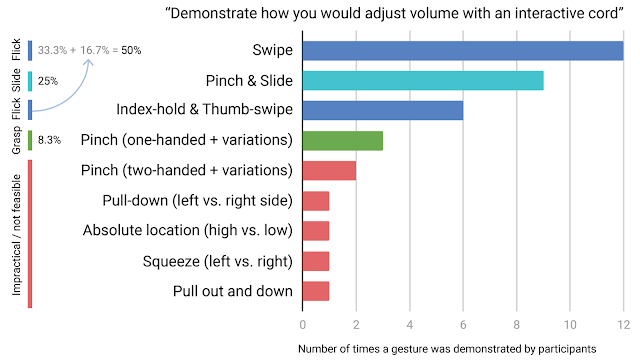

We conducted a gesture elicitation study, which showed opportunities for an expanded gesture set. Inspired by these results, we decided to investigate five motion gestures based on flicks and slides, along with single-touch gestures (pinch, grab and pat).

Gesture elicitation study with imagined touch sensing.

Notably, the inherent relationships in the repeated sensing matrices are well suited to machine learning classification. The ML classifier used in our research enables quick training with limited data, which makes a user-dependent interaction system reasonable. In our experience, training for a typical gesture takes less than 30 seconds, which is comparable to the amount of time required to train a fingerprint sensor.
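To make per-user training with limited data concrete, here is a minimal sketch that uses a nearest-centroid classifier. This is a stand-in chosen for simplicity: the post does not name the classifier used in the research. The feature vectors, their length, and the function names are likewise hypothetical.

```python
import math
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple


def train_centroids(
    examples: Sequence[Tuple[str, Sequence[float]]]
) -> Dict[str, List[float]]:
    """Average the feature vectors recorded for each gesture label."""
    sums: Dict[str, List[float]] = {}
    counts: Dict[str, int] = defaultdict(int)
    for label, vec in examples:
        if label not in sums:
            sums[label] = [0.0] * len(vec)
        for i, value in enumerate(vec):
            sums[label][i] += value
        counts[label] += 1
    return {
        label: [s / counts[label] for s in total]
        for label, total in sums.items()
    }


def classify(centroids: Dict[str, List[float]], vec: Sequence[float]) -> str:
    """Return the label whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: math.dist(centroids[label], vec))


# Made-up three-value feature vectors; two recordings per gesture.
examples = [
    ("pinch", [1.0, 0.0, 0.0]),
    ("pinch", [0.9, 0.1, 0.0]),
    ("pat", [0.0, 0.0, 1.0]),
    ("pat", [0.0, 0.1, 0.9]),
]
centroids = train_centroids(examples)
print(classify(centroids, [0.8, 0.1, 0.1]))
```

A model this small can be refit in well under a second from a handful of recordings, which is the kind of property that makes a user-dependent system practical.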

User-Independent, Continuous Twist: Quantifying Precision and Speed

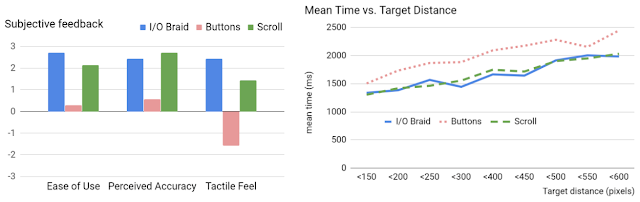

The per-user trained gesture recognition enabled eight new discrete gestures. For continuous interactions, we also wanted to quantify how well user-independent, continuous twist performs for precision tasks. We compared our e-textile with two baselines, a capacitive multi-touch trackpad (“Scroll”) and the familiar headphone cord remote control (“Buttons”). We designed a lab study where the three devices controlled 1D movement in a targeting task.

Across the 1,800 trials, which covered 12 participants and three techniques, we analyzed three dependent variables: time on task (milliseconds), total motion, and motion during end-of-trial. Participants also provided qualitative feedback through rankings and comments.

Our quantitative analysis suggests that our e-textile’s twisting is faster than existing headphone button controls and comparable in speed to a touch surface. Qualitative feedback also indicated a preference for e-textile interaction over headphone controls.

Left: Weighted average subjective feedback. We mapped the 7-point Likert scale to a score in the range [-3, 3] and multiplied by the number of times the technique received that rating, and computed an average for all the scores. Right: Mean completion times for target distances show that Buttons were consistently slower.
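The weighted score described in the caption can be worked through in a few lines. The sketch below assumes the average is taken over the total number of ratings, which is one plausible reading of the caption. The rating counts are invented for illustration and are not the study's data.

```python
from typing import Sequence


def weighted_likert_score(counts: Sequence[int]) -> float:
    """Average score in [-3, 3] from counts of ratings 1..7.

    counts[0] is the number of "1" ratings, counts[6] the number of "7"s.
    Each rating r maps to the score r - 4, so 1 -> -3, 4 -> 0, 7 -> 3.
    """
    if len(counts) != 7:
        raise ValueError("expected one count per point on the 7-point scale")
    total = sum(counts)
    if total == 0:
        raise ValueError("no ratings to average")
    weighted = sum((rating - 4) * n for rating, n in enumerate(counts, start=1))
    return weighted / total


# Hypothetical distribution for 12 respondents (not from the study).
example_counts = [0, 0, 1, 2, 3, 4, 2]
print(round(weighted_likert_score(example_counts), 2))
```

With these invented counts the weighted sum is 16 over 12 ratings, which gives a score of about 1.33 on the [-3, 3] scale.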

Gesture Prototypes: Headphones, Hoodie Drawstrings, and Speaker Cord

We developed different prototypes to demonstrate the capabilities of our e-textile architecture: e-textile USB-C headphones to control media playback on the phone, a hoodie drawstring to invisibly add music control to clothing, and an interactive cord for gesture controls of smart speakers.

Left: Tap = Play/Pause; Center: Double-tap = Next track; Right: Roll = Volume +/-

Interactive speaker cord for simultaneous use of continuous (twisting/rolling) and discrete gestures (pinch/pat) to control music playback.

We introduce an interactive e-textile architecture for embedded sensing and visual feedback, which can enable both precise small-scale and large-scale motion in a compact cord form factor. With this work, we hope to advance textile user interfaces and inspire the use of microinteractions for future wearable interfaces and smart fabrics, where eyes-free access and casual, compact and efficient input are beneficial. We hope that our e-textile will inspire others to augment physical objects with scalable techniques, while preserving industrial design and aesthetics.

Acknowledgements

This work is a collaboration across multiple teams at Google. Key contributors to the project include Alex Olwal, Thad Starner, Jon Moeller, Greg Priest-Dorman, Ben Carroll, and Gowa Mainini. We thank the Google ATAP Jacquard team for our collaboration, especially Shiho Fukuhara, Munehiko Sato, and Ivan Poupyrev. We thank Google Wearables, and Kenneth Albanowski and Karissa Sawyer, in particular. Finally, we would like to thank Mark Zarich for illustrations, Bryan Allen for videography, Frank Li for data processing, Mathieu Le Goc for valuable discussions, and Carolyn Priest-Dorman for textile advice.
